OpenStack Mitaka and Ceph Jewel Integration: Installation and Deployment Guide


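System Deployment Environment

System Configuration

The OpenStack deployment consists of two nodes, and Ceph storage runs on a separate single node. The configuration of each node is listed in the table below.

| No. | IP | Hostname | Role | Memory | Disk | Operating system |
| --- | --- | --- | --- | --- | --- | --- |
| 1 | 192.168.0.4 | controller | Controller (management) node | 8 GB | 40 GB | CentOS Linux release 7.2.1511 minimal |
| 2 | 192.168.0.9 | compute | Compute node, also the OpenStack storage node | 8 GB | 40 GB | CentOS Linux release 7.2.1511 minimal |
| 3 | 192.168.0.208 | ceph | Ceph node | 2 GB | 2 x 20 GB | CentOS Linux release 7.2.1511 |

The controller node serves as both the OpenStack management node and the control node; the compute node serves as the compute node.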

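Network Configuration

This deployment uses the self-service network architecture.

Basic Configuration

Synchronizing the hosts file and setting up passwordless SSH between nodes

On every node, /etc/hosts must map the IP address of each node to its hostname.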

[root@controller ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.0.4 controller

192.168.0.9 compute

Every node must hold the same IP-to-hostname mappings for all nodes.
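The mapping requirement above can be checked with a small helper. This is a minimal sketch: the function name has_host_entry is hypothetical, and the IP addresses and hostnames in the usage comments are the ones listed in this guide.

```shell
# has_host_entry FILE IP NAME
# Succeeds when FILE contains a line whose first field is IP
# and which lists NAME among its hostnames.
has_host_entry() {
    awk -v ip="$2" -v name="$3" '
        $1 == ip {
            for (i = 2; i <= NF; i++)
                if ($i == name) found = 1
        }
        END { exit found ? 0 : 1 }
    ' "$1"
}

# Example (run on each node):
#   has_host_entry /etc/hosts 192.168.0.4 controller || echo "controller missing"
#   has_host_entry /etc/hosts 192.168.0.9 compute    || echo "compute missing"
```

Running the check on every node before continuing helps catch a missing or mistyped entry early, since later steps refer to the nodes by hostname.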

The following uses the compute node as an example to set up SSH; repeat the same steps on every other node.

[root@compute ~]# ssh-keygen -t rsa

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Created directory '/root/.ssh'.

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

6c:e6:e3:e6:bc:c2:00:0a:bd:86:00:5b:4a:87:f9:b9 root@compute

The key's randomart image is:

+--[ RSA 2048]----+
| o |
|.+.. |
|o=o . |
|= oo . |
|oo o. S |
|o oE. + |
| . o o |
| oo.. |
| +=. |
+-----------------+

[root@compute ~]# ssh-copy-id -i compute    # set up passwordless login to the node itself

The authenticity of host 'compute (192.168.0.9)' can't be established.

ECDSA key fingerprint is f2:56:78:dd:48:7f:f5:c4:bf:70:96:17:11:98:7a:30.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@compute's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'compute'"

and check to make sure that only the key(s) you wanted were added.

[root@compute ~]# ssh-copy-id -i controller    # set up passwordless SSH to the controller node

The authenticity of host 'controller (192.168.0.4)' can't be established.

ECDSA key fingerprint is 92:6f:a0:74:6d:cd:b8:cc:7c:b9:6e:de:5d:d2:c4:14.

Are you sure you want to continue connecting (yes/no)? yes

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

root@controller's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'controller'"

and check to make sure that only the key(s) you wanted were added.

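Time Synchronization

Install Chrony, an implementation of NTP (Network Time Protocol), to keep the clocks of the management node and the compute node in sync.

- On the management node (controller):

Install the chrony package

# yum install chrony -y

Edit the configuration file

# vim /etc/chrony.conf

Set the time servers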

server 0.centos.pool.ntp.org iburst

server 1.centos.pool.ntp.org iburst

server 2.centos.pool.ntp.org iburst

server 3.centos.pool.ntp.org iburst

Append the subnet so that the other nodes are allowed to connect to the chrony daemon on the controller

allow 192.168.0.0/24

Enable the NTP service at boot and start it

# systemctl enable chronyd.service

# systemctl restart chronyd.service

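- On all other nodes:

Install the chrony package

# yum install chrony -y

Edit the configuration file

# vim /etc/chrony.conf

Set controller as the time server

server controller iburst

Enable the NTP service at boot and start it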

# systemctl enable chronyd.service

# systemctl start chronyd.service

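- Verify the time synchronization service

Verify the NTP service on the controller node

# chronyc sources

Verify the NTP service on the other nodes

# chronyc sources

Note: if another node does not list controller as its time source, restart the service (systemctl restart chronyd.service) and check again.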
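The check described in the note can be scripted. The sketch below assumes the usual `chronyc sources` layout, in which the source currently used for synchronization is marked with `^*` in the first column; the function name synced_source is hypothetical.

```shell
# synced_source: read `chronyc sources` output on stdin and print the name
# of the source chronyd is currently synchronized to (the line marked "^*").
# Prints nothing when no source has been selected yet.
synced_source() {
    awk '$1 == "^*" { print $2 }'
}

# Example on a node other than controller:
#   [ "$(chronyc sources | synced_source)" = "controller" ] \
#       || systemctl restart chronyd.service
```

A freshly started chronyd may need some time before it selects a source, so an empty result right after a restart is not necessarily an error.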

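Make sure that all nodes use the same time zone:

Check the time zone

# date +%z

If the time zones differ, set the time zone to UTC+8 (Asia/Shanghai)

# cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime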

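OpenStack Packages

Run the following steps on both the OpenStack controller node and the compute node to download and update the OpenStack packages.

Enable the OpenStack RPM repository

# yum install centos-release-openstack-mitaka -y

Upgrade the packages

# yum upgrade

Install the OpenStack Python client

# yum install python-openstackclient -y

Install the openstack-selinux package, which automatically manages SELinux security policies for OpenStack services

# yum install openstack-selinux -y

Other Installation and Configuration

The following steps install and configure the SQL database, NoSQL database, message queue, and cache. These components are usually installed on the controller (management) node and are the basic building blocks of the OpenStack Identity, Image, Compute, and other services.

- Install and configure the SQL database

Install MariaDB (MySQL)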

# yum install mariadb mariadb-server python2-PyMySQL -y

Create the OpenStack MySQL configuration file

# vim /etc/my.cnf.d/openstack.cnf

Bind to the IP address of the controller node

[mysqld]

bind-address = 192.168.0.4

default-storage-engine = innodb

innodb_file_per_table

collation-server = utf8_general_ci

character-set-server = utf8

Enable and start the MariaDB service

# systemctl enable mariadb.service

# systemctl start mariadb.service

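Secure the MariaDB installation

# mysql_secure_installation

Set root password? [Y/n] Y

Set the database root password to 123456

Accept the defaults for the remaining prompts

- Install and configure the NoSQL database

The Telemetry service stores its data in a NoSQL database, so MongoDB must be installed on the controller node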

# yum install mongodb-server mongodb -y

Edit the configuration file

# vim /etc/mongod.conf

Bind to the IP address of the controller node

bind_ip = 192.168.0.4

Use smaller journal files

smallfiles = true

Enable and start the MongoDB service

# systemctl enable mongod.service

# systemctl start mongod.service

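- Install and configure the message queue

Install the RabbitMQ message queue service

# yum install rabbitmq-server -y

Enable the service at boot and start it

# systemctl enable rabbitmq-server.service

# systemctl start rabbitmq-server.service

Create the message queue user openstack

# rabbitmqctl add_user openstack RABBIT_PASS    # the password is set to RABBIT_PASS

Grant the openstack user configure, write, and read permissions

# rabbitmqctl set_permissions openstack ".*" ".*" ".*"

- Install and configure Memcached

The Identity service uses Memcached to cache tokens

Install the packages

# yum install memcached python-memcached -y

Enable and start the service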

# systemctl enable memcached.service

# systemctl start memcached.service

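Identity Service

The Identity service centrally manages authentication, authorization, and the service catalog. Other services work together with the Identity service and use it as a common, unified API.

The Identity service consists of the Server, Drivers, and Modules components. The Server is a centralized server that provides authentication and authorization services through a RESTful interface. Drivers, also called back ends, are integrated into the Server and are used to access identity information for OpenStack. Modules run in the address space of the OpenStack components: they intercept service requests, extract user credentials, and send them to the central server for authentication. The middleware modules are integrated with OpenStack through the Python Web Server Gateway Interface (WSGI). All of the following steps are performed on the OpenStack management node, that is, the controller node.

Basic Configuration

- Database configuration

Create the database and the database user

$ mysql -u root -p

Run the following statements in the database client:

Create the database

CREATE DATABASE keystone;

Grant privileges to the database user

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' \
IDENTIFIED BY 'KEYSTONE_DBPASS';

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' \
IDENTIFIED BY 'KEYSTONE_DBPASS';

Generate a random value with openssl to use as the administration token during the initial configuration

$ openssl rand -hex 10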

db8a90c712a682517585

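- Install and configure the components

The Identity service is served by the Apache HTTP server on ports 5000 and 35357

Install the packages

# yum install openstack-keystone httpd mod_wsgi -y

Edit the configuration file

# vim /etc/keystone/keystone.conf

Set the following options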

[DEFAULT]

…

admin_token = db8a90c712a682517585

Note: the token is the random value generated with openssl above.

[database]

…

connection = mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone

[token]

…

provider = fernet

Populate the Identity service database

# su -s /bin/sh -c "keystone-manage db_sync" keystone

Initialize the Fernet keys

# keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

Configure the Apache HTTP server

Set the server name

# vim /etc/httpd/conf/httpd.conf

ServerName controller

Create the configuration file

# vim /etc/httpd/conf.d/wsgi-keystone.conf

Listen 5000

Listen 35357

<VirtualHost *:5000>

WSGIDaemonProcess keystone-public processes=5 threads=1 user=keystone group=keystone display-name=%{GROUP}

WSGIProcessGroup keystone-public

WSGIScriptAlias / /usr/bin/keystone-wsgi-public

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

ErrorLogFormat "%{cu}t %M"

ErrorLog /var/log/httpd/keystone-error.log

CustomLog /var/log/httpd/keystone-access.log combined

<Directory /usr/bin>

Require all granted

</Directory>

</VirtualHost>

<VirtualHost *:35357>

WSGIDaemonProcess keystone-admin processes=5 threads=1 user=keystone group=keystone display-name=%{GROUP}

WSGIProcessGroup keystone-admin

WSGIScriptAlias / /usr/bin/keystone-wsgi-admin

WSGIApplicationGroup %{GLOBAL}

WSGIPassAuthorization On

ErrorLogFormat "%{cu}t %M"

ErrorLog /var/log/httpd/keystone-error.log

CustomLog /var/log/httpd/keystone-access.log combined

<Directory /usr/bin>

Require all granted

</Directory>

</VirtualHost>

Enable and start the service

# systemctl enable httpd.service

# systemctl start httpd.service

The service can now be reached in a browser at localhost:5000 and localhost:35357

Create the Service Entity and API Endpoints

Set temporary environment variables

$ export OS_TOKEN=db8a90c712a682517585

Note: OS_TOKEN is the random value generated earlier with openssl.

$ export OS_URL=http://controller:35357/v3

$ export OS_IDENTITY_API_VERSION=3

Create the service entity and API endpoints

$ openstack service create \
--name keystone --description "OpenStack Identity" identity

$ openstack endpoint create --region RegionOne \
identity public http://controller:5000/v3

$ openstack endpoint create --region RegionOne \
identity internal http://controller:5000/v3

$ openstack endpoint create --region RegionOne \
identity admin http://controller:35357/v3

Create a Domain, Projects, Users, and Roles

Create the default domain

$ openstack domain create --description "Default Domain" default

Create the admin project

$ openstack project create --domain default \
--description "Admin Project" admin

Create the admin user

$ openstack user create --domain default \
--password-prompt admin

User Password:(123456)

Repeat User Password:(123456)

Create the admin role

$ openstack role create admin

Add the admin role to the admin project and user

$ openstack role add --project admin --user admin admin

In the same way, create the service project, which holds one unique user per service, and then the demo project, the demo user, and the user role

$ openstack project create --domain default \
--description "Service Project" service

$ openstack project create --domain default \
--description "Demo Project" demo

$ openstack user create --domain default \
--password-prompt demo

(password: 123456)

$ openstack role create user

$ openstack role add --project demo --user demo user

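Verify Operation

Run the following steps on the management node

- For security reasons, disable the temporary token authentication mechanism

# vim /etc/keystone/keystone-paste.ini

Remove admin_token_auth from the [pipeline:public_api], [pipeline:admin_api], and [pipeline:api_v3] sections. Remove only that entry; do not delete or comment out the whole pipeline line.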
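The edit described above can also be done non-interactively. The following is a minimal sketch using sed; the function name strip_admin_token_auth is hypothetical, and the sketch assumes each pipeline is written on a single line beginning with `pipeline =`.

```shell
# strip_admin_token_auth FILE
# Print FILE with the admin_token_auth filter removed from every
# "pipeline = ..." line. Other filters and all other lines, including
# the [filter:admin_token_auth] section itself, are left untouched.
strip_admin_token_auth() {
    sed '/^pipeline[[:space:]]*=/ s/[[:space:]]admin_token_auth//' "$1"
}

# Example (keep a backup, then rewrite the file from the backup):
#   cp /etc/keystone/keystone-paste.ini /etc/keystone/keystone-paste.ini.bak
#   strip_admin_token_auth /etc/keystone/keystone-paste.ini.bak \
#       > /etc/keystone/keystone-paste.ini
```

Writing the result to standard output instead of editing in place keeps the original file available for comparison with diff.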

- Unset the temporary environment variables

$ unset OS_TOKEN OS_URL

- Request authentication tokens

Request a token as the admin user

$ openstack --os-auth-url http://controller:35357/v3 \
--os-project-domain-name default --os-user-domain-name default \
--os-project-name admin --os-username admin token issue

Request a token as the demo user

$ openstack --os-auth-url http://controller:5000/v3 \
--os-project-domain-name default --os-user-domain-name default \
--os-project-name demo --os-username demo token issue

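Create OpenStack Client Environment Scripts

The previous steps used environment variables and command-line options to interact with the Identity service through the OpenStack client. OpenRC script files make authentication more convenient by setting all of these values at once.

$ vim admin-openrc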

export OS_PROJECT_DOMAIN_NAME=default

export OS_USER_DOMAIN_NAME=default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=123456

export OS_AUTH_URL=http://controller:35357/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

$ vim demo-openrc

export OS_PROJECT_DOMAIN_NAME=default

export OS_USER_DOMAIN_NAME=default

export OS_PROJECT_NAME=demo

export OS_USERNAME=demo

export OS_PASSWORD=123456

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

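Make the scripts executable

$ chmod +x admin-openrc

$ chmod +x demo-openrc

Source one of these scripts to switch quickly between projects and users, for example:

$ . admin-openrc

Request an authentication token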

$ openstack token issue
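Before requesting a token it can help to confirm that the script was actually sourced into the current shell. The sketch below lists any of the variables from the OpenRC files above that are still unset or empty; the function name missing_openrc_vars is hypothetical.

```shell
# missing_openrc_vars: print the name of every required variable that is
# unset or empty in the current shell. Prints nothing when all are set.
missing_openrc_vars() {
    for v in OS_PROJECT_DOMAIN_NAME OS_USER_DOMAIN_NAME OS_PROJECT_NAME \
             OS_USERNAME OS_PASSWORD OS_AUTH_URL OS_IDENTITY_API_VERSION; do
        eval "val=\${$v:-}"            # indirect lookup of the variable named in $v
        [ -n "$val" ] || echo "$v"
    done
}

# Example:
#   . admin-openrc
#   missing_openrc_vars    # no output means the environment is complete
```

Note that the scripts must be sourced with `.` rather than executed, because a child process cannot change the environment of the calling shell.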

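Image Service

In this guide the Image service is deployed on the management node, that is, the controller node.

Basic Configuration

Log in to the MySQL client, create the database and user, and grant the required database privileges

[root@controller ~]# mysql -u root -p

CREATE DATABASE glance;

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' \
IDENTIFIED BY 'GLANCE_DBPASS';

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' \
IDENTIFIED BY 'GLANCE_DBPASS';

(Note: the default placeholder password is used as-is.)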

User Authentication

Source the client script to obtain admin credentials

$ . admin-openrc

Create the glance user

$ openstack user create --domain default --password-prompt glance

User Password:(123456)

Repeat User Password:

$ openstack role add --project service --user glance admin

$ openstack service create --name glance \
--description "OpenStack Image" image

Create the Image service API endpoints

$ openstack endpoint create --region RegionOne \
image public http://controller:9292

$ openstack endpoint create --region RegionOne \
image internal http://controller:9292

$ openstack endpoint create --region RegionOne \
image admin http://controller:9292

Installation and Configuration

Install the glance package

# yum install openstack-glance -y

Configure /etc/glance/glance-api.conf

# vim /etc/glance/glance-api.conf

Set the following options (GLANCE_DBPASS is the glance database password)

[database]

connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = 123456

[paste_deploy]

flavor = keystone

[glance_store]

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

Configure /etc/glance/glance-registry.conf

# vim /etc/glance/glance-registry.conf

Set the following options (replace GLANCE_DBPASS with the glance database password you actually set)

[database]

connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = 123456

[paste_deploy]

flavor = keystone

Populate the Image service database

# su -s /bin/sh -c "glance-manage db_sync" glance

Finalize the installation

# systemctl enable openstack-glance-api.service \
openstack-glance-registry.service

# systemctl start openstack-glance-api.service \
openstack-glance-registry.service

Verify Operation

Switch to the admin user

$ . admin-openrc

Download the source image

$ wget http://download.cirros-cloud.net/0.3.4/cirros-0.3.4-x86_64-disk.img

Upload the image and set its properties

$ openstack image create "cirros" --file cirros-0.3.4-x86_64-disk.img --disk-format qcow2 --container-format bare --public

Confirm that the upload succeeded

$ openstack image list

Compute Service

The OpenStack Compute service consists mainly of the following components: the nova-api service, the nova-api-metadata service, the nova-compute service, the nova-scheduler service, the nova-conductor module, the nova-cert module, the nova-network worker module, the nova-consoleauth module, the nova-novncproxy daemon, the nova-spicehtml5proxy daemon, the nova-xvpvncproxy daemon, the nova-cert daemon, the nova client, the message queue, and the SQL database.

Installing and Configuring on the Management (Controller) Node

Basic Configuration

Create the databases and the database user

[root@controller ~]# mysql -uroot -p123456

Run the following SQL statements

CREATE DATABASE nova_api;

CREATE DATABASE nova;

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' \
IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' \
IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' \
IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' \
IDENTIFIED BY 'NOVA_DBPASS';

Source the admin credentials

$ . admin-openrc

Create the nova user

$ openstack user create --domain default \
--password-prompt nova

User Password:(123456)

Repeat User Password:

Add the admin role

$ openstack role add --project service --user nova admin

Create the nova service entity

$ openstack service create --name nova \
--description "OpenStack Compute" compute

Create the Compute service API endpoints

$ openstack endpoint create --region RegionOne \
compute public http://controller:8774/v2.1/%\(tenant_id\)s

$ openstack endpoint create --region RegionOne \
compute internal http://controller:8774/v2.1/%\(tenant_id\)s

$ openstack endpoint create --region RegionOne \
compute admin http://controller:8774/v2.1/%\(tenant_id\)s

Installation and Configuration

Install the packages

# yum install openstack-nova-api openstack-nova-conductor openstack-nova-console openstack-nova-novncproxy openstack-nova-scheduler -y

Configure nova

Edit the configuration file

# vim /etc/nova/nova.conf

Note: replace the passwords and IP addresses with the values for your own environment.

[DEFAULT]

enabled_apis = osapi_compute,metadata

rpc_backend = rabbit

auth_strategy = keystone

my_ip = 192.168.0.4

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[api_database]

connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova_api

[database]

connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova

[oslo_messaging_rabbit]

rabbit_host = controller

rabbit_userid = openstack

rabbit_password = RABBIT_PASS

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = 123456

[vnc]

vncserver_listen = $my_ip

vncserver_proxyclient_address = $my_ip

[glance]

api_servers = http://controller:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

Populate the Compute databases

# su -s /bin/sh -c "nova-manage api_db sync" nova

# su -s /bin/sh -c "nova-manage db sync" nova

Enable the nova services at boot and start them

# systemctl enable openstack-nova-api.service \

openstack-nova-consoleauth.service openstack-nova-scheduler.service \

openstack-nova-conductor.service openstack-nova-novncproxy.service

# systemctl start openstack-nova-api.service \

openstack-nova-consoleauth.service openstack-nova-scheduler.service \

openstack-nova-conductor.service openstack-nova-novncproxy.service

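Installing and Configuring on the Compute Node (compute)

Before installing the compute node, enable the OpenStack package repository as described in System Deployment Environment → OpenStack Packages.

Install the nova compute package

# yum install openstack-nova-compute -y

Edit the configuration file on one compute node, then copy it to all the others (if there are several compute nodes)

# vim /etc/nova/nova.conf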

[DEFAULT]

rpc_backend = rabbit

auth_strategy = keystone

my_ip = 192.168.0.9

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[oslo_messaging_rabbit]

rabbit_host = controller

rabbit_userid = openstack

rabbit_password = RABBIT_PASS

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = 123456

[vnc]

enabled = True

vncserver_listen = 0.0.0.0

vncserver_proxyclient_address = $my_ip

novncproxy_base_url = http://controller:6080/vnc_auto.html

[glance]

api_servers = http://controller:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

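Determine whether the node supports hardware acceleration for virtual machines

$ egrep -c '(vmx|svm)' /proc/cpuinfo

If the command returns 0, hardware acceleration is not supported; set virt_type in the [libvirt] section of the configuration file to qemu. If it returns 1 or more, set virt_type to kvm.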

[libvirt]

virt_type = qemu
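The decision rule above can be expressed as a small helper. This is a minimal sketch; the function name choose_virt_type is hypothetical, and the optional file argument exists only so that the rule can be tried against a saved copy of /proc/cpuinfo.

```shell
# choose_virt_type [CPUINFO]
# Print "kvm" when the CPU flags include vmx (Intel) or svm (AMD),
# otherwise print "qemu". CPUINFO defaults to /proc/cpuinfo.
choose_virt_type() {
    if grep -Eq '(vmx|svm)' "${1:-/proc/cpuinfo}"; then
        echo kvm
    else
        echo qemu
    fi
}

# Example:
#   echo "virt_type = $(choose_virt_type)"
```

Inside a virtual machine the flags are usually absent unless nested virtualization is enabled on the host, which is why this guide ends up with qemu.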

Enable and start the services

# systemctl enable libvirtd.service openstack-nova-compute.service

# systemctl start libvirtd.service openstack-nova-compute.service

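Make sure that nova.conf has the correct owner and group on every node

Issue 1: after copying the configuration file to another compute node with scp and changing the IP address, the service failed to start with the error "Failed to open some config files: /etc/nova/nova.conf". The cause is wrong ownership of the configuration file. Fix it with: chown root:nova nova.conf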
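The ownership problem described above can be detected before the service is started. The sketch below relies on GNU stat as shipped with CentOS 7; the function name owner_group is hypothetical.

```shell
# owner_group FILE: print "owner:group" of FILE (uses GNU stat).
owner_group() {
    stat -c '%U:%G' "$1"
}

# Example on a compute node after copying the file with scp:
#   [ "$(owner_group /etc/nova/nova.conf)" = "root:nova" ] \
#       || chown root:nova /etc/nova/nova.conf
```

The same check applies to the other configuration files that this guide copies between nodes, with the group changed accordingly (for example root:neutron for neutron.conf).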

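Verify Operation

On the controller node

$ . admin-openrc

List the service components to verify that each process started and registered successfully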

$ openstack compute service list

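Networking Service

Network configuration options depend on the server hardware, and physical machines differ from virtual machines. Configure the network for your own environment with reference to the official guide at http://docs.openstack.org/mitaka/zh_CN/install-guide-rdo/neutron.html. This example runs on virtual machines.

Installing and Configuring on the Management (Controller) Node

Basic Configuration

[root@controller ~]# mysql -uroot -p123456

CREATE DATABASE neutron;

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' \
IDENTIFIED BY 'NEUTRON_DBPASS';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' \
IDENTIFIED BY 'NEUTRON_DBPASS';

Source the admin credentials

$ . admin-openrc

Create the neutron user and add the admin role

$ openstack user create --domain default --password-prompt neutron

User Password:(123456)

Repeat User Password:

Add the role

$ openstack role add --project service --user neutron admin

Create the neutron service entity

$ openstack service create --name neutron \
--description "OpenStack Networking" network

$ openstack endpoint create --region RegionOne \
network public http://controller:9696

$ openstack endpoint create --region RegionOne \
network internal http://controller:9696

$ openstack endpoint create --region RegionOne \
network admin http://controller:9696

Configure Networking Options

Two networking options are available: provider networks and self-service networks. This guide states that self-service networks are selected. Note, however, that the ML2 and Linux bridge settings below (flat and VLAN type drivers only, an empty tenant_network_types, VXLAN disabled) match the provider networks option of the official guide.

Install the components

# yum install openstack-neutron openstack-neutron-ml2 \
openstack-neutron-linuxbridge ebtables -y

Configure the server component (replace the passwords with your own values)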

# vim /etc/neutron/neutron.conf

[database]

connection = mysql+pymysql://neutron:NEUTRON_DBPASS@controller/neutron

[DEFAULT]

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = True

rpc_backend = rabbit

auth_strategy = keystone

notify_nova_on_port_status_changes = True

notify_nova_on_port_data_changes = True

[oslo_messaging_rabbit]

rabbit_host = controller

rabbit_userid = openstack

rabbit_password = RABBIT_PASS

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = 123456

[nova]

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = 123456

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

# vim /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

type_drivers = flat,vlan

tenant_network_types =

mechanism_drivers = linuxbridge

extension_drivers = port_security

[ml2_type_flat]

flat_networks = provider

[securitygroup]

enable_ipset = True

# vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:eno33554984

Note: eno33554984 is the second network interface of the node.

[vxlan]

enable_vxlan = False

[securitygroup]

enable_security_group = True

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

# vim /etc/neutron/l3_agent.ini    # (this file was not configured in my local virtual machine environment)

[DEFAULT]

interface_driver = neutron.agent.linux.interface.BridgeInterfaceDriver

external_network_bridge =

# vim /etc/neutron/dhcp_agent.ini

[DEFAULT]

interface_driver = neutron.agent.linux.interface.BridgeInterfaceDriver

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = True

Configure the metadata agent

# vim /etc/neutron/metadata_agent.ini

[DEFAULT]

nova_metadata_ip = controller

metadata_proxy_shared_secret = METADATA_SECRET

Note: METADATA_SECRET is a secret string of your own choosing. It must match the metadata_proxy_shared_secret value set in nova.conf below.

# vim /etc/nova/nova.conf

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = 123456

service_metadata_proxy = True

metadata_proxy_shared_secret = METADATA_SECRET

Finalize the Installation

Create a symbolic link to the plug-in configuration file

# ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

Populate the database

# su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \
--config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

Restart the Compute API service

# systemctl restart openstack-nova-api.service

Enable and start the services

# systemctl enable neutron-server.service \

neutron-linuxbridge-agent.service neutron-dhcp-agent.service \

neutron-metadata-agent.service

# systemctl start neutron-server.service \

neutron-linuxbridge-agent.service neutron-dhcp-agent.service \

neutron-metadata-agent.service

Installing and Configuring on All Compute Nodes

Install the components

# yum install openstack-neutron-linuxbridge ebtables ipset -y

Configure the common component

# vim /etc/neutron/neutron.conf

[DEFAULT]

rpc_backend = rabbit

auth_strategy = keystone

[oslo_messaging_rabbit]

rabbit_host = controller

rabbit_userid = openstack

rabbit_password = RABBIT_PASS

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = 123456

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

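Configure the other nodes in the same way. A configuration file copied with scp needs its ownership corrected.

Copy the configuration file to the other compute nodes, then fix the file ownership on each of them

# scp /etc/neutron/neutron.conf root@computer02:/etc/neutron/

Switch to the other node

# chown root:neutron /etc/neutron/neutron.conf

Configure Networking Options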

# vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

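physical_interface_mappings = provider:eno33554984

Note: eno33554984 stands for PROVIDER_INTERFACE_NAME. Replace it with the name of this compute node's physical provider interface (not the management network interface).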

[vxlan]

enable_vxlan = False

[securitygroup]

enable_security_group = True

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

Copy the file to the other compute nodes and check its ownership.

Configure all compute nodes to use neutron

# vim /etc/nova/nova.conf

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = 123456

Finalize the Installation

Restart the Compute service

# systemctl restart openstack-nova-compute.service

Enable and start the Linux bridge agent

# systemctl enable neutron-linuxbridge-agent.service

# systemctl start neutron-linuxbridge-agent.service

Verify Operation

Run the following commands on the management node

$ . admin-openrc

$ neutron ext-list

$ neutron agent-list

Dashboard Service

Installing and Configuring on the Controller Node

Install the package

# yum install openstack-dashboard -y

Edit the configuration

# vim /etc/openstack-dashboard/local_settings

OPENSTACK_HOST = "controller"

ALLOWED_HOSTS = ['*', ]

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {
    'default': {
        'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',
        'LOCATION': 'controller:11211',
    },
}

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

OPENSTACK_API_VERSIONS = {
    "identity": 3,
    "image": 2,
    "volume": 2,
}

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "default"

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

If you chose networking option 1 (provider networks), use the following settings

OPENSTACK_NEUTRON_NETWORK = {
    …
    'enable_router': False,
    'enable_quotas': False,
    'enable_distributed_router': False,
    'enable_ha_router': False,
    'enable_lb': False,
    'enable_firewall': False,
    'enable_vpn': False,
    'enable_fip_topology_check': False,
}

TIME_ZONE = "UTC"

Finalize the Installation

# systemctl restart httpd.service memcached.service

Verify Operation

Enter default as the domain and log in as either admin or demo

Problem encountered: RuntimeError: Unable to create a new session key. It is likely that the cache is unavailable.

To fix it, change 'LOCATION': 'controller:11211',

to 'LOCATION': '127.0.0.1:11211',

Block Storage Service

Installing and Configuring on the Management (Controller) Node

Basic Configuration

[root@controller ~]# mysql -uroot -p123456

CREATE DATABASE cinder;

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' \
IDENTIFIED BY 'CINDER_DBPASS';

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' \
IDENTIFIED BY 'CINDER_DBPASS';

$ . admin-openrc

$ openstack user create --domain default --password-prompt cinder

User Password:(123456)

Repeat User Password:

$ openstack role add --project service --user cinder admin

$ openstack service create --name cinder \
--description "OpenStack Block Storage" volume

$ openstack service create --name cinderv2 \
--description "OpenStack Block Storage" volumev2

Create the cinder service API endpoints

$ openstack endpoint create --region RegionOne \
volume public http://controller:8776/v1/%\(tenant_id\)s

$ openstack endpoint create --region RegionOne \
volume internal http://controller:8776/v1/%\(tenant_id\)s

$ openstack endpoint create --region RegionOne \
volume admin http://controller:8776/v1/%\(tenant_id\)s

$ openstack endpoint create --region RegionOne \
volumev2 public http://controller:8776/v2/%\(tenant_id\)s

$ openstack endpoint create --region RegionOne \
volumev2 internal http://controller:8776/v2/%\(tenant_id\)s

$ openstack endpoint create --region RegionOne \
volumev2 admin http://controller:8776/v2/%\(tenant_id\)s

Install and configure the components

# yum install openstack-cinder -y

# vim /etc/cinder/cinder.conf

[database]

connection = mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder

[DEFAULT]

rpc_backend = rabbit

auth_strategy = keystone

my_ip = 192.168.0.4

[oslo_messaging_rabbit]

rabbit_host = controller

rabbit_userid = openstack

rabbit_password = RABBIT_PASS

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = 123456

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

Populate the database

# su -s /bin/sh -c "cinder-manage db sync" cinder

Configure Compute to use Block Storage

# vim /etc/nova/nova.conf

[cinder]

os_region_name = RegionOne

Restart the Compute API service

# systemctl restart openstack-nova-api.service

Enable and start the cinder services

# systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service

# systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service

Installing and Configuring on All Storage Nodes

(In this example the storage node is the compute node.)

Install the cinder package

# yum install openstack-cinder -y

With multiple storage nodes, run the following script

ssh computer01 sudo yum install -y openstack-cinder

ssh computer02 sudo yum install -y openstack-cinder

ssh computer03 sudo yum install -y openstack-cinder

computer01 through computer03 are the hostnames of the OSD nodes

Edit the configuration file

# vim /etc/cinder/cinder.conf

[database]

connection = mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder

[DEFAULT]

rpc_backend = rabbit

auth_strategy = keystone

enabled_backends = lvm

glance_api_servers = http://controller:9292

my_ip = 192.168.0.9

Note: my_ip is the management network IP address of the current storage node

[oslo_messaging_rabbit]

rabbit_host = controller

rabbit_userid = openstack

rabbit_password = RABBIT_PASS

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = 123456

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

Enable and start the service

# systemctl enable openstack-cinder-volume.service

# systemctl start openstack-cinder-volume.service

With multiple storage nodes, run the following script

ssh computer01 sudo systemctl enable openstack-cinder-volume.service

ssh computer02 sudo systemctl enable openstack-cinder-volume.service

ssh computer03 sudo systemctl enable openstack-cinder-volume.service

ssh computer01 sudo systemctl start openstack-cinder-volume.service

ssh computer02 sudo systemctl start openstack-cinder-volume.service

ssh computer03 sudo systemctl start openstack-cinder-volume.service

Verify Operation

# . admin-openrc

$ cinder service-list

Ceph Integration Configuration

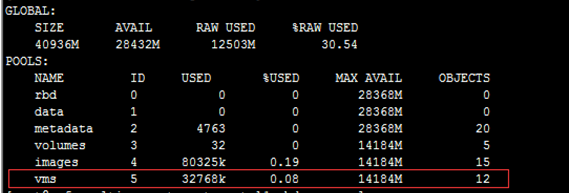

Create the Pools

# ceph osd pool create volumes 64

# ceph osd pool create images 64

# ceph osd pool create vms 64

Install the Ceph Client Packages

Configure the CentOS 7 Ceph yum repository

On the glance-api node (the controller node)

yum install python-rbd -y

On the nova-compute and cinder-volume nodes (the compute node)

yum install ceph-common -y

Set Up Ceph Client Authentication for OpenStack

Run the following commands on the Ceph storage node

[root@ceph ~]# ssh controller sudo tee /etc/ceph/ceph.conf < /etc/ceph/ceph.conf

[root@ceph ~]# ssh compute sudo tee /etc/ceph/ceph.conf < /etc/ceph/ceph.conf

If cephx authentication is enabled, create new users for Nova/Cinder and Glance as follows

ceph auth get-or-create client.cinder mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=volumes, allow rwx pool=vms, allow rx pool=images'

ceph auth get-or-create client.glance mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=images'

Add the keyrings for client.cinder and client.glance as follows

ceph auth get-or-create client.glance | ssh controller sudo tee /etc/ceph/ceph.client.glance.keyring

ssh controller sudo chown glance:glance /etc/ceph/ceph.client.glance.keyring

ceph auth get-or-create client.cinder | ssh compute sudo tee /etc/ceph/ceph.client.cinder.keyring

ssh compute sudo chown cinder:cinder /etc/ceph/ceph.client.cinder.keyring

Create a temporary copy of the key on the nova-compute node

ceph auth get-key client.cinder | ssh {your-compute-node} tee client.cinder.key

In this example:

ceph auth get-key client.cinder | ssh compute tee client.cinder.key

Run the following steps on every compute node (this example has only one) to add the secret key to libvirt

uuidgen

536f43c1-d367-45e0-ae64-72d987417c91

Create secret.xml with the following content, replacing the uuid with the newly generated one.

cat > secret.xml <<EOF
<secret ephemeral='no' private='no'>
<uuid>536f43c1-d367-45e0-ae64-72d987417c91</uuid>
<usage type='ceph'>
<name>client.cinder secret</name>
</usage>
</secret>
EOF

virsh secret-define --file secret.xml

The value after --base64 below is the content of client.cinder.key in /root on the compute node, the temporary key file created for the compute node earlier

virsh secret-set-value --secret 536f43c1-d367-45e0-ae64-72d987417c91 --base64 AQCliYVYCAzsEhAAMSeU34p3XBLVcvc4r46SyA==

[root@compute ~]# rm -f client.cinder.key secret.xml
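The libvirt secret steps above can be parameterized so that the generated uuid is typed only once. This is a minimal sketch: the function name render_secret_xml is hypothetical, and the commands in the usage comments are the same virsh calls used above.

```shell
# render_secret_xml UUID: print the libvirt secret definition for client.cinder.
render_secret_xml() {
    cat <<EOF
<secret ephemeral='no' private='no'>
  <uuid>$1</uuid>
  <usage type='ceph'>
    <name>client.cinder secret</name>
  </usage>
</secret>
EOF
}

# Example on a compute node (run before client.cinder.key is deleted; the same
# uuid must later be used as rbd_secret_uuid in cinder.conf and nova.conf):
#   uuid=$(uuidgen)
#   render_secret_xml "$uuid" > secret.xml
#   virsh secret-define --file secret.xml
#   virsh secret-set-value --secret "$uuid" --base64 "$(cat client.cinder.key)"
```

Reading the key from the file avoids pasting it by hand and keeps it out of the document.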

OpenStack Configuration

On the controller node

vim /etc/glance/glance-api.conf

[DEFAULT]

…

default_store = rbd

show_image_direct_url = True

show_multiple_locations = True

…

[glance_store]

stores = rbd

default_store = rbd

rbd_store_pool = images

rbd_store_user = glance

rbd_store_ceph_conf = /etc/ceph/ceph.conf

rbd_store_chunk_size = 8

Disable Glance cache management by removing cachemanagement from the flavor

[paste_deploy]

flavor = keystone

On the compute node

vim /etc/cinder/cinder.conf

[DEFAULT]

Keep the existing settings and change the following

enabled_backends = ceph

#glance_api_version = 2

…

[ceph]

volume_driver = cinder.volume.drivers.rbd.RBDDriver

rbd_pool = volumes

rbd_ceph_conf = /etc/ceph/ceph.conf

rbd_flatten_volume_from_snapshot = false

rbd_max_clone_depth = 5

rbd_store_chunk_size = 4

rados_connect_timeout = -1

glance_api_version = 2

rbd_user = cinder

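rbd_secret_uuid = 536f43c1-d367-45e0-ae64-72d987417c91

Note that the uuid differs for each compute node; fill in the actual value. This example has only one compute node.

Note: if multiple cinder back ends are configured, glance_api_version = 2 must be added to the [DEFAULT] section. It is commented out in this example.

On every compute node, edit /etc/nova/nova.conf

vim /etc/nova/nova.conf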

[libvirt]

virt_type = qemu

hw_disk_discard = unmap

images_type = rbd

images_rbd_pool = vms

images_rbd_ceph_conf = /etc/ceph/ceph.conf

rbd_user = cinder

rbd_secret_uuid = 536f43c1-d367-45e0-ae64-72d987417c91

disk_cachemodes="network=writeback"

libvirt_inject_password = false

libvirt_inject_key = false

libvirt_inject_partition = -2

live_migration_flag=VIR_MIGRATE_UNDEFINE_SOURCE, VIR_MIGRATE_PEER2PEER, VIR_MIGRATE_LIVE, VIR_MIGRATE_TUNNELLED

Restart the OpenStack Services

On the controller node

systemctl restart openstack-glance-api.service

On the compute node

systemctl restart openstack-nova-compute.service openstack-cinder-volume.service

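Networking

Create the Network

On the controller node, source the admin credentials to gain access to admin-only commands:

[root@controller ~]# . admin-openrc

[root@controller ~]# neutron net-create --shared --provider:physical_network provider \
> --provider:network_type flat provider

Create the Subnet

Adjust the start and end IP addresses, DNS server, gateway, and other values to your environment

[root@controller ~]# neutron subnet-create --name provider --allocation-pool start=192.168.0.10,end=192.168.0.30 --dns-nameserver 192.168.0.1 --gateway 192.168.0.1 provider 192.168.0.0/24

Create the m1.nano Flavor

The smallest default flavor requires 512 MB of memory. For environments whose compute nodes have less than 4 GB of memory, we recommend creating the m1.nano flavor, which needs only 64 MB. Use the m1.nano flavor only with the CirrOS image and for testing purposes.

[root@controller ~]# openstack flavor create --id 0 --vcpus 1 --ram 64 --disk 1 m1.nano

Generate a Key Pair

Most cloud images support public key authentication rather than conventional password authentication. Before launching an instance, you must add a public key to the Compute service.

Source the credentials of the demo project

[root@controller ~]# . demo-openrc

Generate and add a key pair

Use the existing public key

[root@controller ~]# openstack keypair create --public-key ~/.ssh/id_rsa.pub mykey

Verify that the key pair was added

[root@controller ~]# openstack keypair list

Add Security Group Rules

By default, the default security group applies to all instances and includes firewall rules that deny remote access to instances. For Linux images such as CirrOS, we recommend allowing at least ICMP (ping) and secure shell (SSH).

Add rules to the default security group

Permit ICMP (ping):

[root@controller ~]# openstack security group rule create --proto icmp default

Permit secure shell (SSH) access:

[root@controller ~]# openstack security group rule create --proto tcp --dst-port 22 default

Testing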

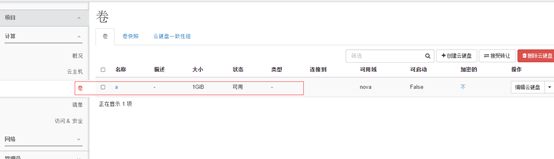

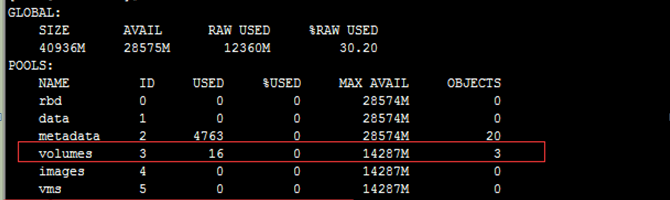

Create a Volume

Volumes are created in the volumes pool of the Ceph storage. Create a volume as shown below.

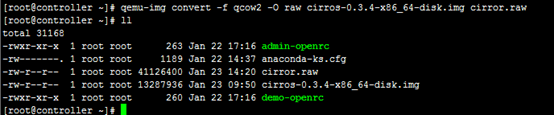

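Create an Image

With the Ceph back end, images must be in raw format, because Ceph does not support QCOW2 for hosting virtual machine disks. Convert the image format first.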

[root@controller ~]# qemu-img convert -f qcow2 -O raw cirros-0.3.4-x86_64-disk.img cirror.raw
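The result of the conversion can be confirmed with `qemu-img info`, which reports the detected format on its "file format:" line. The sketch below extracts that value; the function name image_format is hypothetical.

```shell
# image_format: read `qemu-img info` output on stdin and print the value
# of its "file format:" line (for example raw or qcow2).
image_format() {
    awk -F': ' '/^file format:/ { print $2 }'
}

# Example on the controller node:
#   qemu-img info cirror.raw | image_format
```

Checking the format before uploading avoids registering a qcow2 file in Glance under the raw disk format.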

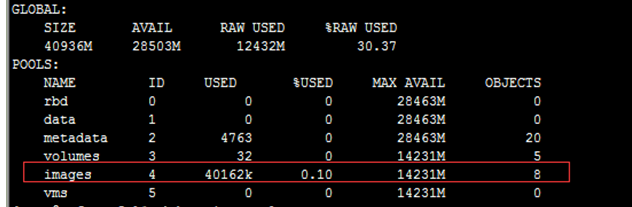

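Create the image in the OpenStack web UI

The image is uploaded to the Ceph storage.

Verify the Ceph storage

Create an Instance

Create the instance in the OpenStack dashboard

Choose the options that fit your environment step by step, then click the Launch Instance button.

Verify the Ceph storage

Problem encountered: when opening the console of the instance from the web UI, the console address cannot be resolved through DNS.

Solution: on the compute node, edit nova.conf and replace controller with its IP address 192.168.0.4, then restart the nova service

systemctl restart openstack-nova-compute.service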