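OpenStack Ocata: Block Storage + Swift + Ceph RBD + radosgw Integration, Installation and Deployment Manual

I. Document Introduction

This document gives only basic installation and deployment guidance for the OpenStack Ocata release, plus guidance on integrating the functional interfaces. It covers:

- installing the basic OpenStack components;
- installing the block storage component and the Swift object storage component;
- integrating Ceph RBD with the existing cinder, glance, and images components to provide distributed block storage;
- integrating the Ceph radosgw gateway with the existing Swift interface to provide object storage.

This document does not describe the individual OpenStack components in detail. It is intended for testers and technical support staff doing basic integration and debugging. For detailed operations and feature explanations, refer to the official OpenStack documentation.

II. Environment

| Hostname | IP | Role | Additional role |

| controller | 192.168.1.50 | Controller node | glance |

| compute | 192.168.1.51 | Compute node | |

| block_swift | 192.168.1.52 | Block storage node | Object storage node |

| radosgw1-3 | 192.168.1.31-33 | Ceph RBD nodes | radosgw gateway nodes |

All nodes are virtual machines running CentOS 7.2.1511.

The controller node (controller) and the compute node (compute) each have 2 NICs:

- One NIC has an external IP and serves as the management interface. It needs Internet access to install software.
- The other NIC has no IP address. It provides bridging for virtual machines once the Networking service is configured.

The block_swift node (block and object storage) has 2 separate disks, one for basic block storage and one for object storage.

The Ceph cluster consists of 3 virtual machines and provides RBD block storage and radosgw gateway object storage. In this example only the primary mon/mds node is needed.

******* All user passwords, service passwords, and database passwords are 123456.

******* The firewall and SELinux are disabled on all nodes.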

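III. Basic Configuration

Configure the NIC IP addresses and the hosts file on each node

1. Controller node

External (management) NIC configuration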

[root@controller ~]# cat /etc/sysconfig/network-scripts/ifcfg-<management NIC name>

IPADDR=192.168.1.50

NETMASK=255.255.0.0

GATEWAY=192.168.0.1

DNS1=192.168.0.1

..... (omitted)

NIC configuration for virtual machines (provider network):

[root@controller ~]# cat /etc/sysconfig/network-scripts/ifcfg-eno33554992

TYPE="Ethernet"

BOOTPROTO="none"

DEVICE="eno33554992"

ONBOOT="yes"

HWADDR=00:0c:29:f2:cf:85

UUID=c231a056-9eb3-cd70-1d06-ab0361508f39

[root@controller ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.1.50 controller

192.168.1.51 compute

192.168.1.52 block_swift

192.168.1.31 radosgw1

192.168.1.32 radosgw2

192.168.1.33 radosgw3

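2. Compute node

Same as the controller except for the external IP. The VM NIC is configured the same way, apart from its own MAC address and UUID.

3. Block and object storage node

This node has only one NIC and no VM NIC. Apart from the external IP, everything else is the same.

4. On all Ceph nodes, add the following entries to /etc/hosts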

192.168.1.50 controller

192.168.1.51 compute

192.168.1.52 block_swift

5. Verification

On the controller node:

[root@controller ~]# ping www.baidu.com

PING www.a.shifen.com (61.135.169.121) 56(84) bytes of data.

64 bytes from 61.135.169.121: icmp_seq=1 ttl=55 time=19.1 ms

[root@controller ~]# ping compute

PING compute (192.168.1.51) 56(84) bytes of data.

64 bytes from compute (192.168.1.51): icmp_seq=1 ttl=64 time=26.8 ms

64 bytes from compute (192.168.1.51): icmp_seq=2 ttl=64 time=0.182 ms

[root@controller ~]# ping block_swift

PING block_swift (192.168.1.52) 56(84) bytes of data.

64 bytes from block_swift (192.168.1.52): icmp_seq=1 ttl=64 time=1.48 ms

64 bytes from block_swift (192.168.1.52): icmp_seq=2 ttl=64 time=0.196 ms

On the compute node:

[root@compute ~]# ping www.baidu.com

PING www.a.shifen.com (61.135.169.125) 56(84) bytes of data.

64 bytes from 61.135.169.125: icmp_seq=1 ttl=55 time=23.9 ms

[root@compute ~]# ping controller

PING controller (192.168.1.50) 56(84) bytes of data.

64 bytes from controller (192.168.1.50): icmp_seq=1 ttl=64 time=4.86 ms

64 bytes from controller (192.168.1.50): icmp_seq=2 ttl=64 time=0.208 ms

[root@compute ~]# ping block_swift

PING block_swift (192.168.1.52) 56(84) bytes of data.

64 bytes from block_swift (192.168.1.52): icmp_seq=1 ttl=64 time=0.999 ms

64 bytes from block_swift (192.168.1.52): icmp_seq=2 ttl=64 time=0.202 ms

On the block storage node:

[root@block_swift ~]# ping www.baidu.com

PING www.a.shifen.com (61.135.169.125) 56(84) bytes of data.

64 bytes from 61.135.169.125: icmp_seq=1 ttl=55 time=23.8 ms

[root@block_swift ~]# ping controller

PING controller (192.168.1.50) 56(84) bytes of data.

64 bytes from controller (192.168.1.50): icmp_seq=1 ttl=64 time=0.169 ms

64 bytes from controller (192.168.1.50): icmp_seq=2 ttl=64 time=0.145 ms

[root@block_swift ~]# ping compute

PING compute (192.168.1.51) 56(84) bytes of data.

64 bytes from compute (192.168.1.51): icmp_seq=1 ttl=64 time=1.74 ms

64 bytes from compute (192.168.1.51): icmp_seq=2 ttl=64 time=0.461 ms

Network verification is complete.

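Configure the NTP service

Install Chrony, an implementation of NTP (Network Time Protocol), to keep the time synchronized between the controller node and the other nodes.

- On the controller node, perform the following:

Install chrony

# yum install chrony -y

Edit the configuration file

# vim /etc/chrony.conf

Set the time servers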

server 0.centos.pool.ntp.org iburst

server 1.centos.pool.ntp.org iburst

server 2.centos.pool.ntp.org iburst

server 3.centos.pool.ntp.org iburst

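allow 192.168.0.0/16

The allow line adds the management subnet so that the other nodes can connect to the chrony daemon on controller.

The subnet must cover every node IP. With the 255.255.0.0 netmask used here, that subnet is 192.168.0.0/16. The narrower 192.168.0.0/24 would not include the 192.168.1.x nodes.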
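The following is a quick, optional way to check whether a candidate allow subnet really covers the nodes. It is a minimal sketch using Python's standard ipaddress module. It assumes the three OpenStack node IPs from the environment table and is not part of the chrony setup itself.

```python
import ipaddress
from typing import List

# Assumption: OpenStack node IPs from the environment table (controller, compute, block_swift).
NODE_IPS = ["192.168.1.50", "192.168.1.51", "192.168.1.52"]


def uncovered(allow_cidr: str, ips: List[str]) -> List[str]:
    """Return the IPs that a chrony 'allow <cidr>' line would NOT admit."""
    network = ipaddress.ip_network(allow_cidr)  # raises ValueError if host bits are set
    return [ip for ip in ips if ipaddress.ip_address(ip) not in network]


if __name__ == "__main__":
    for cidr in ("192.168.0.0/24", "192.168.0.0/16"):
        print(cidr, "does not cover:", uncovered(cidr, NODE_IPS))
```

With these inputs, 192.168.0.0/24 leaves all three nodes uncovered, while 192.168.0.0/16 covers them all.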

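Enable chronyd at boot and start it

# systemctl enable chronyd.service

# systemctl restart chronyd.service

- On the compute and block storage nodes, perform the following:

Install chrony

# yum install chrony -y

Edit the configuration file so that it uses only the controller as its time source (comment out the default server lines):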

[root@block_swift ~]# cat /etc/chrony.conf

server controller iburst

Enable chronyd at boot and start it

# systemctl enable chronyd.service

# systemctl start chronyd.service

- Verify time synchronization

Verify NTP on the controller node

[root@controller ~]# chronyc sources

210 Number of sources = 4

MS Name/IP address Stratum Poll Reach LastRx Last sample

===========================================================================

^- mx.comglobalit.com 2 6 17 54 +1215us[ +28ms] +/- 179ms

^- dns1.synet.edu.cn 2 6 17 54 -43ms[ -43ms] +/- 60ms

^* 120.25.115.20 2 6 17 56 +27us[ +27ms] +/- 12ms

^- dns2.synet.edu.cn 2 6 17 54 -44ms[ -18ms] +/- 62ms

Verify NTP on the other nodes

[root@compute ~]# chronyc sources

210 Number of sources = 1

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

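^? controller 0 6 0 - +0ns[ +0ns] +/- 0ns

Note: in this output the controller is marked ^?, meaning it is not yet usable as a source. If the other nodes do not reference the controller, restart the service (systemctl restart chronyd.service) and check again.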
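When several nodes need checking, reading chronyc sources by eye gets tedious. The sketch below shows one way to pick out the source a node is synchronized to and any unreachable servers. It assumes the column layout shown above: the first character is the mode ("^" means server), the second is the state ("*" means synchronized, "?" means connectivity lost or not usable). The sample text is copied from the compute node output above.

```python
from typing import List, Optional

# Sample copied from the compute node output above.
COMPUTE_SAMPLE = """\
210 Number of sources = 1
MS Name/IP address Stratum Poll Reach LastRx Last sample
===============================================================================
^? controller 0 6 0 - +0ns[ +0ns] +/- 0ns
"""


def _servers_with_state(output: str, state: str) -> List[str]:
    # Only server lines ('^' mode) are considered; the name is the second field.
    prefix = "^" + state
    return [line.split()[1] for line in output.splitlines() if line.startswith(prefix)]


def selected_source(output: str) -> Optional[str]:
    """Name of the server chrony is currently synchronized to ('^*'), or None."""
    names = _servers_with_state(output, "*")
    return names[0] if names else None


def unreachable_sources(output: str) -> List[str]:
    """Names of servers marked '^?' (connectivity lost or not usable)."""
    return _servers_with_state(output, "?")
```

On a node, pass it the output of `subprocess.run(["chronyc", "sources"], capture_output=True, text=True).stdout`. After a successful restart, selected_source should return controller.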

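Also make sure all nodes use the same time zone:

Check the time zone offset

# date +%z

If the offsets differ, set the time zone to UTC+8 (Asia/Shanghai):

# cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime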

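OpenStack packages

Perform the following on the controller, compute, and block nodes to install and update the OpenStack packages.

Enable the OpenStack RPM repository

# yum install centos-release-openstack-ocata -y

Upgrade the packages

# yum upgrade

Install the OpenStack Python client

# yum install python-openstackclient -y

Install the openstack-selinux package, which automatically manages the security policies for OpenStack services

# yum install openstack-selinux -y

Other installation and configuration

The following steps install and configure the SQL database, NoSQL database, message queue, and cache. These components are usually installed on the controller node. They provide the basic infrastructure for the Identity, Image, Compute, and other OpenStack services.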

- SQL database installation and configuration

Install MariaDB

# yum install mariadb mariadb-server python2-PyMySQL -y

Create the OpenStack MySQL configuration file

# vim /etc/my.cnf.d/openstack.cnf

Bind to the controller node's IP address

[mysqld]

bind-address = 192.168.1.50

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8

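Enable and start the MariaDB service

# systemctl enable mariadb.service

# systemctl start mariadb.service

Secure the MariaDB installation

# mysql_secure_installation

Set root password? [Y/n]Y

Set the database root password to 123456

Accept the defaults for the remaining prompts

- Message queue installation and configuration

Install the RabbitMQ message queue service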

# yum install rabbitmq-server -y

Enable and start the service

# systemctl enable rabbitmq-server.service

# systemctl start rabbitmq-server.service

Create the message queue user openstack

# rabbitmqctl add_user openstack 123456    # the password is set to 123456

Grant the openstack user configure, write, and read permissions

# rabbitmqctl set_permissions openstack ".*" ".*" ".*"

- Memcached installation and configuration

The Identity service uses Memcached as a cache

Install

# yum install memcached python-memcached -y

Edit the configuration file /etc/sysconfig/memcached

OPTIONS="-l 127.0.0.1,::1,controller"

Enable and start the service

# systemctl enable memcached.service

# systemctl start memcached.service

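IV. Identity Service

The Identity service provides centralized authentication, authorization, and a catalog of services. The other services work with Identity and use it as a common, unified API.

Identity consists of three parts:

- Server: a centralized server that provides authentication and authorization through a RESTful interface.
- Drivers: also called back ends. They are integrated into the Server and retrieve identity information for OpenStack.
- Modules: these run in the address space of the OpenStack components. They intercept service requests, extract user credentials, and send them to the authentication server for validation. The middleware modules are integrated with OpenStack through the Python Web Server Gateway Interface (WSGI).

All of the following steps are performed on the OpenStack management node, i.e., the controller node.

Basic configuration

- Database configuration

Create the database and the database user

$ mysql -u root -p123456

In the database client, run the following:

Create the database

CREATE DATABASE keystone;

Grant privileges to the database user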

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'192.168.1.50' IDENTIFIED BY '123456';

Replace 123456 with a suitable password, then exit the database client.
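The same CREATE DATABASE plus three GRANT statements are repeated later for glance, the three nova databases, and neutron. A small generator helps avoid typos, such as granting to the wrong user. This is a sketch only. It assumes the three hosts used throughout this manual (localhost, %, and the controller IP 192.168.1.50) and a simple password that needs no SQL escaping.

```python
from typing import Iterable, List

# Assumption: the three host patterns used in this manual.
DEFAULT_HOSTS = ("localhost", "%", "192.168.1.50")


def db_setup_sql(database: str, user: str, password: str,
                 hosts: Iterable[str] = DEFAULT_HOSTS) -> List[str]:
    """Build CREATE DATABASE and GRANT statements for one service database.

    No escaping is done: the password must not contain quotes or backslashes.
    """
    statements = [f"CREATE DATABASE {database};"]
    for host in hosts:
        statements.append(
            f"GRANT ALL PRIVILEGES ON {database}.* TO '{user}'@'{host}' "
            f"IDENTIFIED BY '{password}';"
        )
    return statements


if __name__ == "__main__":
    # All three nova databases are accessed by the same 'nova' user.
    for db, user in [("keystone", "keystone"), ("nova_api", "nova"), ("nova_cell0", "nova")]:
        print("\n".join(db_setup_sql(db, user, "123456")))
```

The printed statements can be pasted into the MariaDB client or piped to `mysql -u root -p`.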

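- Install and configure components

The Identity service is served by the Apache HTTP server on ports 5000 and 35357

Install the packages

# yum install openstack-keystone httpd mod_wsgi -y

Edit the configuration file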

# vim /etc/keystone/keystone.conf

[DEFAULT]

[database]

connection = mysql+pymysql://keystone:123456@controller/keystone

[token]

provider = fernet

Populate the Identity service database

# su -s /bin/sh -c "keystone-manage db_sync" keystone

Initialize the Fernet key repositories

# keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

# keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

Bootstrap the Identity service

# keystone-manage bootstrap --bootstrap-password 123456 \
  --bootstrap-admin-url http://controller:35357/v3/ \
  --bootstrap-internal-url http://controller:5000/v3/ \
  --bootstrap-public-url http://controller:5000/v3/ \
  --bootstrap-region-id RegionOne

Replace 123456 with a suitable password

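Configure the Apache HTTP server

Set the server name

# vim /etc/httpd/conf/httpd.conf

ServerName controller

Create a symbolic link to the Identity WSGI configuration

# ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

Enable and start the service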

# systemctl enable httpd.service

# systemctl start httpd.service

Configure the administrative account (environment variables)

$ export OS_USERNAME=admin

$ export OS_PASSWORD=123456

$ export OS_PROJECT_NAME=admin

$ export OS_USER_DOMAIN_NAME=default

$ export OS_PROJECT_DOMAIN_NAME=default

$ export OS_AUTH_URL=http://controller:35357/v3

$ export OS_IDENTITY_API_VERSION=3

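Create a domain, projects, users, and roles

The Identity service provides authentication for each OpenStack service. It uses a combination of domains, projects, users, and roles.

Create the service project

[root@controller ~]# openstack project create --domain default --description "Service Project" service

+-------------+----------------------------------+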

| Field | Value |

+-------------+----------------------------------+

| description | Service Project |

| domain_id | default |

| enabled | True |

| id | ac4fd866dce64dff8e96a920f0a6b23c |

| is_domain | False |

| name | service |

| parent_id | default |

+-------------+----------------------------------+

Create the demo project

[root@controller ~]# openstack project create --domain default \
> --description "Demo Project" demo

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | Demo Project |

| domain_id | default |

| enabled | True |

| id | 4e04a6d6f37c4bd9a4a2c55266ca87f5 |

| is_domain | False |

| name | demo |

| parent_id | default |

Create the demo user

[root@controller ~]# openstack user create --domain default \
> --password-prompt demo

User Password:

Repeat User Password:

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | fd7572fe12fc44e1a9b3870232815f34 |

| name | demo |

| options | {} |

| password_expires_at | None |

+---------------------+----------------------------------+

The password is set to 123456.

Create the user role

[root@controller ~]# openstack role create user

+-----------+----------------------------------+

| Field | Value |

+-----------+----------------------------------+

| domain_id | None |

| id | a7deae7afb1347cb9854093c6dcb3567 |

| name | user |

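Add the user role to the demo user in the demo project

$ openstack role add --project demo --user demo user

This command produces no output.

Verify operation

Perform the following on the controller node

- For security reasons, disable the temporary authentication token mechanism

# vim /etc/keystone/keystone-paste.ini

Remove admin_token_auth from the pipeline lines in the [pipeline:public_api], [pipeline:admin_api], and [pipeline:api_v3] sections. Remove only that one entry from each line; do not delete or comment out the whole line.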
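The edit above removes a single token from a line rather than the whole line. The sketch below shows that edit done programmatically on the three named sections. It is illustrative only: the real pipeline contents differ between releases, so the sample in the usage check uses hypothetical filter names, and the real keystone-paste.ini should be backed up before any automated edit.

```python
import re

# The three sections named in this step.
TARGET_SECTIONS = {"pipeline:public_api", "pipeline:admin_api", "pipeline:api_v3"}


def drop_admin_token_auth(ini_text: str) -> str:
    """Remove the admin_token_auth filter from pipeline lines in the target sections.

    The 'pipeline = ...' line itself is kept; only the one token is dropped.
    """
    out = []
    section = None
    for line in ini_text.splitlines(keepends=True):
        header = re.match(r"\s*\[([^\]]+)\]", line)
        if header:
            section = header.group(1).strip()
        elif section in TARGET_SECTIONS and line.lstrip().startswith("pipeline"):
            key, sep, value = line.partition("=")
            if sep:
                kept = [tok for tok in value.split() if tok != "admin_token_auth"]
                line = f"{key.rstrip()} = {' '.join(kept)}\n"
        out.append(line)
    return "".join(out)
```

For example, with a hypothetical section `[pipeline:api_v3]` whose line is `pipeline = filter_a admin_token_auth filter_b service_v3`, the result is `pipeline = filter_a filter_b service_v3`. Sections not listed above are left untouched.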

- Unset the temporary environment variables

$ unset OS_AUTH_URL OS_PASSWORD

- Request an authentication token

As the admin user:

[root@controller ~]# openstack --os-auth-url http://controller:35357/v3 --os-project-domain-name default --os-user-domain-name default --os-project-name admin --os-username admin token issue

Password:

+------------+----------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------+----------------------------------------------------------------------------------------------------------------+

| expires | 2018-03-17T12:54:21+0000 |

| id | gAAAAABarQHtwpHA1U5GLOdV7GNVc4p8d1NWqP9UxAIdELnrlihckPoh6HtzZ219Y68BgELxL7k0pmFr90en7xIdPsfodgymHPmyKiMMXK6i7n |

| | zKX754fQNJpMJS2Rv80T52db3YUIsc9m8r-nH63I1Z0w0GFLBhhblC9b7YfvulWAVuhklQNHQ |

| project_id | e4fbba4efcd5412fa9a944d808b2d5f7 |

| user_id | 0546c9bc04d14da4a2e87b8376467b8a |

+------------+----------------------------------------------------------------------------------------------------------------+

[root@controller ~]#

As the demo user:

[root@controller ~]# openstack --os-auth-url http://controller:5000/v3 --os-project-domain-name default --os-user-domain-name default --os-project-name demo --os-username demo token issue

Password:

+------------+----------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------+----------------------------------------------------------------------------------------------------------------+

| expires | 2018-03-17T12:55:47+0000 |

| id | gAAAAABarQJD4FSUOw175T02BIFVjipVeu-BJtF8uUagyov1JmC9tDtZBMW8e61iOJ5vdy_1yfaydJUZ7yw0prV01t- |

| | hOvtCFYCzVlbrKO4IlN71LOWRuVJQggJF2o8loS9qc2TzUEiCVtYy0Om0jOGFwW7bomg8nKZZMm9MjFB4xOEfDkhtT6w |

| project_id | 4e04a6d6f37c4bd9a4a2c55266ca87f5 |

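| user_id | fd7572fe12fc44e1a9b3870232815f34 |

+------------+----------------------------------------------------------------------------------------------------------------+

Create OpenStack client environment scripts

The previous steps combined environment variables and command-line options to talk to the Identity service through the OpenStack client. To make this more efficient, OpenStack supports client environment scripts, also known as OpenRC files.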

$ vim admin-openrc

export OS_PROJECT_DOMAIN_NAME=default

export OS_USER_DOMAIN_NAME=default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=123456

export OS_AUTH_URL=http://controller:35357/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

$ vim demo-openrc

export OS_PROJECT_DOMAIN_NAME=default

export OS_USER_DOMAIN_NAME=default

export OS_PROJECT_NAME=demo

export OS_USERNAME=demo

export OS_PASSWORD=123456

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2
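admin-openrc and demo-openrc differ only in the project, user, password, and auth URL. Generating them from one template keeps the shared lines identical. This is a minimal sketch. It writes values unquoted, exactly as in the files above, so it assumes passwords contain no spaces or shell metacharacters.

```python
def render_openrc(project: str, user: str, password: str, auth_url: str) -> str:
    """Return OpenRC text in the same layout as admin-openrc / demo-openrc above."""
    env = {
        "OS_PROJECT_DOMAIN_NAME": "default",
        "OS_USER_DOMAIN_NAME": "default",
        "OS_PROJECT_NAME": project,
        "OS_USERNAME": user,
        "OS_PASSWORD": password,  # written unquoted: keep passwords free of shell metacharacters
        "OS_AUTH_URL": auth_url,
        "OS_IDENTITY_API_VERSION": "3",
        "OS_IMAGE_API_VERSION": "2",
    }
    return "".join(f"export {name}={value}\n" for name, value in env.items())


if __name__ == "__main__":
    print(render_openrc("admin", "admin", "123456", "http://controller:35357/v3"), end="")
```

Redirect the output to admin-openrc. Call it with ("demo", "demo", "123456", "http://controller:5000/v3") to produce demo-openrc.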

Source one of these scripts to switch quickly between projects and users, for example:

[root@controller ~]# . admin-openrc

[root@controller ~]# openstack token issue

+------------+----------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------+----------------------------------------------------------------------------------------------------------------+

| expires | 2018-03-17T13:00:38+0000 |

| id | gAAAAABarQNmMCPpIhqiw5NAcWkz6mgOuQYP8oN7s7O-m44GeDOlK86-ZuGSM_sT66LCirJuR1Vt_iVbcEsBwNyMa58LNTU- |

| | FHt99m98ULWNAWaKVSCwhjWk_bicirCK8cWcBuFjB7BkbI_a5feZsYFR9U1_wbQu8QYpIZlTpnUuEkzbg4X3UFQ |

| project_id | e4fbba4efcd5412fa9a944d808b2d5f7 |

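| user_id | 0546c9bc04d14da4a2e87b8376467b8a |

+------------+----------------------------------------------------------------------------------------------------------------+

V. Image Service

The Image service is deployed on the management node, i.e., the controller node.

Basic configuration

Log in to the MySQL client, create the database and user, and grant the appropriate database privileges

[root@controller ~]# mysql -u root -p123456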

CREATE DATABASE glance;

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'192.168.1.50' IDENTIFIED BY '123456';

(Note: replace the password with your own.)

User authentication

Source the client script to obtain admin credentials

$ . admin-openrc

Create the glance user

[root@controller ~]# openstack user create --domain default --password-prompt glance

User Password:123456

Repeat User Password:123456

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 4609573809034384931905f9a5e4086d |

| name | glance |

| options | {} |

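| password_expires_at | None |

+---------------------+----------------------------------+

Add the admin role to the glance user in the service project

$ openstack role add --project service --user glance admin

This command produces no output.

Create the glance service entity

[root@controller ~]# openstack service create --name glance \
> --description "OpenStack Image" image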

+-------------+----------------------------------+

| Field | Value |

+-------------+----------------------------------+

| description | OpenStack Image |

| enabled | True |

| id | d567d1e32e0449bbbb651c8c4d2261c6 |

| name | glance |

| type | image |

+-------------+----------------------------------+

Create the Image service API endpoints

$ openstack endpoint create --region RegionOne \
  image public http://controller:9292

$ openstack endpoint create --region RegionOne \
  image internal http://controller:9292

$ openstack endpoint create --region RegionOne \
  image admin http://controller:9292
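Every service in this manual gets the same three endpoints (public, internal, admin) with one URL. The sketch below builds the corresponding `openstack endpoint create` argument lists. The service types and URLs in the main block are the ones used in this manual. Actually running the commands, for example with `subprocess.run(argv, check=True)` after sourcing admin-openrc, is left to the reader.

```python
import shlex
from typing import List


def endpoint_commands(service_type: str, url: str, region: str = "RegionOne") -> List[List[str]]:
    """Return argv lists creating the public, internal and admin endpoints of one service."""
    return [
        ["openstack", "endpoint", "create", "--region", region, service_type, interface, url]
        for interface in ("public", "internal", "admin")
    ]


if __name__ == "__main__":
    # Service types and URLs as used in this manual.
    services = [("image", "http://controller:9292"),
                ("compute", "http://controller:8774/v2.1"),
                ("placement", "http://controller:8778"),
                ("network", "http://controller:9696")]
    for service_type, url in services:
        for argv in endpoint_commands(service_type, url):
            print(" ".join(shlex.quote(arg) for arg in argv))
```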

Install and configure

Install the glance package

# yum install openstack-glance -y

Edit /etc/glance/glance-api.conf

[root@controller ~]# cat /etc/glance/glance-api.conf

[DEFAULT]

[database]

connection = mysql+pymysql://glance:123456@controller/glance

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = 123456

[paste_deploy]

flavor = keystone

[glance_store]

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

Replace the password values (123456) with your own.
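The [keystone_authtoken] block above is repeated in glance-registry.conf, nova.conf, and neutron.conf, and a missing key is a common cause of authentication failures. The following sketch uses Python's standard configparser to list required keys that are absent. The key list is taken from this manual's own blocks and is an assumption, not the complete set keystonemiddleware accepts. Because configparser treats [DEFAULT] as defaults for every section, keys placed under [DEFAULT] would also count as present.

```python
import configparser
from typing import List

# Assumption: the keys this manual sets in [keystone_authtoken] for every service.
REQUIRED_KEYS = ["auth_uri", "auth_url", "memcached_servers", "auth_type",
                 "project_domain_name", "user_domain_name", "project_name",
                 "username", "password"]


def missing_authtoken_keys(ini_text: str) -> List[str]:
    """List required [keystone_authtoken] keys that are absent from a config file's text."""
    # interpolation=None: treat '%' literally; strict=False: tolerate duplicate keys/sections.
    parser = configparser.ConfigParser(interpolation=None, strict=False, allow_no_value=True)
    parser.read_string(ini_text)
    if not parser.has_section("keystone_authtoken"):
        return list(REQUIRED_KEYS)
    return [key for key in REQUIRED_KEYS if not parser.has_option("keystone_authtoken", key)]
```

On the controller, call it with the contents of /etc/glance/glance-api.conf. An empty list means every expected key is set.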

Edit /etc/glance/glance-registry.conf

[root@controller ~]# cat /etc/glance/glance-registry.conf

[DEFAULT]

[database]

connection = mysql+pymysql://glance:123456@controller/glance

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = 123456

[paste_deploy]

flavor = keystone

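Populate the Image service database

# su -s /bin/sh -c "glance-manage db_sync" glance

Finalize installation: enable the services at boot and start them

# systemctl enable openstack-glance-api.service \
  openstack-glance-registry.service

# systemctl start openstack-glance-api.service \
  openstack-glance-registry.service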

Verify operation

Switch to the admin user

$ . admin-openrc

Download the source image

$ wget http://download.cirros-cloud.net/0.3.5/cirros-0.3.5-x86_64-disk.img

Upload the image and set its properties

[root@controller ~]# openstack image create "cirros" \
> --file cirros-0.3.5-x86_64-disk.img \
> --disk-format qcow2 --container-format bare \
> --public

+------------------+------------------------------------------------------+

| Field | Value |

+------------------+------------------------------------------------------+

| checksum | f8ab98ff5e73ebab884d80c9dc9c7290 |

| container_format | bare |

| created_at | 2018-03-17T14:08:06Z |

| disk_format | qcow2 |

| file | /v2/images/4cfdfbad-2e92-4b18-8c5e-e5ae07931a97/file |

| id | 4cfdfbad-2e92-4b18-8c5e-e5ae07931a97 |

| min_disk | 0 |

| min_ram | 0 |

| name | cirros |

| owner | e4fbba4efcd5412fa9a944d808b2d5f7 |

| protected | False |

| schema | /v2/schemas/image |

| size | 13267968 |

| status | active |

| tags | |

| updated_at | 2018-03-17T14:08:06Z |

| virtual_size | None |

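| visibility | public |

+------------------+------------------------------------------------------+

Verify that the upload succeeded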

[root@controller ~]# openstack image list

+--------------------------------------+--------+--------+

| ID | Name | Status |

+--------------------------------------+--------+--------+

| 4cfdfbad-2e92-4b18-8c5e-e5ae07931a97 | cirros | active |

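+--------------------------------------+--------+--------+

VI. Compute Service

The OpenStack Compute service includes the following components:

- services: nova-api, nova-api-metadata, nova-compute, nova-scheduler;
- modules: nova-conductor, nova-cert, the nova-network worker, nova-consoleauth;
- daemons: nova-novncproxy, nova-spicehtml5proxy, nova-xvpvncproxy;
- the nova client, the message queue, and the SQL database.

Installation and configuration on the management (controller) node

Basic configuration

Create the databases and the database user

[root@controller ~]# mysql -uroot -p123456

Run the following SQL statements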

MariaDB [(none)]> CREATE DATABASE nova_api;

Query OK, 1 row affected (0.01 sec)

MariaDB [(none)]> CREATE DATABASE nova;

Query OK, 1 row affected (0.00 sec)

MariaDB [(none)]> CREATE DATABASE nova_cell0;

Query OK, 1 row affected (0.00 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' \
    -> IDENTIFIED BY '123456';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY '123456';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY '123456';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY '123456';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY '123456';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY '123456';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'192.168.1.50' IDENTIFIED BY '123456';

Query OK, 0 rows affected (0.00 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'192.168.1.50' IDENTIFIED BY '123456';

Query OK, 0 rows affected (0.01 sec)

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'192.168.1.50' IDENTIFIED BY '123456';

Query OK, 0 rows affected (0.00 sec)

Replace the password with your own. All three databases are accessed by the same nova user (see the connection strings in nova.conf), so every grant, including the ones for the controller IP, is for 'nova'.

Source the admin credentials

$ . admin-openrc

Create the nova user

$ openstack user create --domain default --password-prompt nova

User Password:(123456)

Repeat User Password:

Add the admin role

$ openstack role add --project service --user nova admin

Create the nova service entity

$ openstack service create --name nova --description "OpenStack Compute" compute

Create the Compute API endpoints

$ openstack endpoint create --region RegionOne \
  compute public http://controller:8774/v2.1

$ openstack endpoint create --region RegionOne \
  compute internal http://controller:8774/v2.1

$ openstack endpoint create --region RegionOne \
  compute admin http://controller:8774/v2.1/

Create the placement user

$ openstack user create --domain default --password-prompt placement

User Password:(123456)

Repeat User Password:

Add the admin role

$ openstack role add --project service --user placement admin

Create the Placement service entity and API endpoints

$ openstack service create --name placement --description "Placement API" placement

$ openstack endpoint create --region RegionOne placement public http://controller:8778

$ openstack endpoint create --region RegionOne placement internal http://controller:8778

$ openstack endpoint create --region RegionOne placement admin http://controller:8778

Install and configure

Install the packages

# yum install openstack-nova-api openstack-nova-conductor \
  openstack-nova-console openstack-nova-novncproxy \
  openstack-nova-scheduler openstack-nova-placement-api -y

Configure nova

Edit the configuration file

# vim /etc/nova/nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:123456@controller

# management IP of the controller node

my_ip = 192.168.1.50

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[api_database]

# replace 123456 with the nova database password

connection = mysql+pymysql://nova:123456@controller/nova_api

[database]

# replace 123456 with the nova database password

connection = mysql+pymysql://nova:123456@controller/nova

[api]

auth_strategy = keystone

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = 123456

[vnc]

enabled = true

vncserver_listen = $my_ip

vncserver_proxyclient_address = $my_ip

[glance]

api_servers = http://controller:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[placement]

os_region_name = RegionOne

project_domain_name = default

project_name = service

auth_type = password

user_domain_name = default

auth_url = http://controller:35357/v3

username = placement

password = 123456
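The [database] and [api_database] connection strings and the transport_url above are URLs. A typo in the user, host, or database name only shows up later as a failed db sync or a service that will not start. The sketch below splits them with the standard urllib.parse module so the parts can be checked at a glance. It assumes passwords are URL-safe. A password containing "@", ":" or "/" would have to be percent-encoded in the real configuration files.

```python
from typing import Dict, Optional
from urllib.parse import urlsplit


def describe_url(url: str) -> Dict[str, Optional[str]]:
    """Split a SQLAlchemy connection string or oslo.messaging transport_url into its parts."""
    parts = urlsplit(url)
    return {
        "scheme": parts.scheme,
        "user": parts.username,
        "password": parts.password,
        "host": parts.hostname,
        "database": parts.path.lstrip("/") or None,  # None when the URL has no path
    }


if __name__ == "__main__":
    for url in ("mysql+pymysql://nova:123456@controller/nova_api",
                "mysql+pymysql://nova:123456@controller/nova",
                "rabbit://openstack:123456@controller"):
        print(describe_url(url))
```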

Add the following to /etc/httpd/conf.d/00-nova-placement-api.conf. Keep the format exact, otherwise httpd fails to restart:

<Directory /usr/bin>
   <IfVersion >= 2.4>
      Require all granted
   </IfVersion>
   <IfVersion < 2.4>
      Order allow,deny
      Allow from all
   </IfVersion>
</Directory>

# systemctl restart httpd

Populate the nova-api database

# su -s /bin/sh -c "nova-manage api_db sync" nova

Register the cell0 database

# su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

Create the cell1 cell

# su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

Populate the nova database

# su -s /bin/sh -c "nova-manage db sync" nova

Verify that cell0 and cell1 are registered

[root@controller ~]# nova-manage cell_v2 list_cells

+-------+--------------------------------------+

| Name | UUID |

+-------+--------------------------------------+

| cell0 | 00000000-0000-0000-0000-000000000000 |

| cell1 | fcc57469-7f01-4853-9720-600301f32a2b |

+-------+--------------------------------------+

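Enable and start the nova services

# systemctl enable openstack-nova-api.service \
  openstack-nova-consoleauth.service openstack-nova-scheduler.service \
  openstack-nova-conductor.service openstack-nova-novncproxy.service

# systemctl start openstack-nova-api.service \
  openstack-nova-consoleauth.service openstack-nova-scheduler.service \
  openstack-nova-conductor.service openstack-nova-novncproxy.service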

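Installation and configuration on the compute node (compute)

Before installing the compute node, set up the OpenStack package repository as described in the "OpenStack packages" steps above.

Install the nova-compute package

# yum install openstack-nova-compute -y

Edit the configuration file on one compute node, then copy it to all the others (if there are several compute nodes)

# vim /etc/nova/nova.conf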

[DEFAULT]

my_ip = 192.168.1.51

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:123456@controller

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[api]

auth_strategy = keystone

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = 123456

[vnc]

enabled = True

vncserver_listen = 0.0.0.0

vncserver_proxyclient_address = $my_ip

novncproxy_base_url = http://controller:6080/vnc_auto.html

[glance]

api_servers = http://controller:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[placement]

os_region_name = RegionOne

project_domain_name = default

project_name = service

auth_type = password

user_domain_name = default

auth_url = http://controller:35357/v3

username = placement

password = 123456

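Check whether the compute node supports hardware acceleration for virtual machines

$ egrep -c '(vmx|svm)' /proc/cpuinfo

If the command returns 0, the node does not support hardware acceleration: set virt_type in the [libvirt] section to qemu. If it returns 1 or more, set virt_type to kvm.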

[libvirt]

virt_type = qemu
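The rule above can also be expressed as a tiny function, which is handy when many compute nodes are provisioned by a script. This sketch mirrors the egrep command: it counts lines of /proc/cpuinfo containing vmx or svm and maps zero to qemu and anything else to kvm. The function name is hypothetical.

```python
import re

_FLAG_PATTERN = re.compile(r"vmx|svm")  # same pattern as egrep '(vmx|svm)'


def recommended_virt_type(cpuinfo_text: str) -> str:
    """Mirror `egrep -c '(vmx|svm)' /proc/cpuinfo`: 0 matching lines -> qemu, otherwise kvm."""
    matching_lines = sum(1 for line in cpuinfo_text.splitlines() if _FLAG_PATTERN.search(line))
    return "kvm" if matching_lines >= 1 else "qemu"
```

On a compute node, call it with the contents of /proc/cpuinfo and write the result as virt_type in the [libvirt] section.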

Enable and start the services

# systemctl enable libvirtd.service openstack-nova-compute.service

# systemctl start libvirtd.service openstack-nova-compute.service

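On all nodes, make sure nova.conf has the correct group ownership

Problem 1: the configuration file was copied to another compute node with scp, and the IP was changed to that node's own management IP. The service then failed to start with the error "Failed to open some config files: /etc/nova/nova.conf". The cause is wrong file ownership. Fix it with: chown root:nova /etc/nova/nova.conf

Add the compute node to the cell database

Run the following commands on the controller node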

[root@controller ~]# . admin-openrc

[root@controller ~]# openstack hypervisor list

+----+---------------------+-----------------+--------------+-------+

| ID | Hypervisor Hostname | Hypervisor Type | Host IP | State |

+----+---------------------+-----------------+--------------+-------+

| 1 | compute | QEMU | 192.168.1.51 | up |

+----+---------------------+-----------------+--------------+-------+

[root@controller ~]# su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

Found 2 cell mappings.

Skipping cell0 since it does not contain hosts.

Getting compute nodes from cell 'cell1': fcc57469-7f01-4853-9720-600301f32a2b

Found 1 computes in cell: fcc57469-7f01-4853-9720-600301f32a2b

Checking host mapping for compute host 'compute': be362ae7-7c5d-48ca-b6e9-40e035b7777a

Creating host mapping for compute host 'compute': be362ae7-7c5d-48ca-b6e9-40e035b7777a

Whenever you add new compute nodes, run nova-manage cell_v2 discover_hosts on the controller node to register them. Alternatively, set a suitable discovery interval in /etc/nova/nova.conf:

[scheduler]

discover_hosts_in_cells_interval = 300

Verify operation

On the controller node:

$ . admin-openrc

List the service components to verify that each process started successfully

[root@controller ~]# openstack compute service list

+----+------------------+------------+----------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+------------------+------------+----------+---------+-------+----------------------------+

| 1 | nova-scheduler | controller | internal | enabled | up | 2018-03-18T10:44:05.000000 |

| 2 | nova-consoleauth | controller | internal | enabled | up | 2018-03-18T10:44:06.000000 |

| 3 | nova-conductor | controller | internal | enabled | up | 2018-03-18T10:44:06.000000 |

| 7 | nova-compute | compute | nova | enabled | up | 2018-03-18T10:44:14.000000 |

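+----+------------------+------------+----------+---------+-------+----------------------------+

Verify the API endpoints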

[root@controller ~]# openstack catalog list

+-----------+-----------+---------------------------------------+

| Name | Type | Endpoints |

+-----------+-----------+---------------------------------------+

| keystone | identity | RegionOne |

| | | public: http://controller:5000/v3/ |

| | | RegionOne |

| | | internal: http://controller:5000/v3/ |

| | | RegionOne |

| | | admin: http://controller:35357/v3/ |

| | | |

| placement | placement | RegionOne |

| | | public: http://controller:8778 |

| | | RegionOne |

| | | admin: http://controller:8778 |

| | | RegionOne |

| | | public: http://controller:8778 |

| | | |

| nova | compute | RegionOne |

| | | public: http://controller:8774/v2.1 |

| | | RegionOne |

| | | admin: http://controller:8774/v2.1/ |

| | | RegionOne |

| | | internal: http://controller:8774/v2.1 |

| | | |

| glance | image | RegionOne |

| | | public: http://controller:9292 |

| | | RegionOne |

| | | internal: http://controller:9292 |

| | | RegionOne |

| | | admin: http://controller:9292 |

| | | |

+-----------+-----------+---------------------------------------+

List the images in the Image service

[root@controller ~]# openstack image list

+--------------------------------------+--------+--------+

| ID | Name | Status |

+--------------------------------------+--------+--------+

| 4cfdfbad-2e92-4b18-8c5e-e5ae07931a97 | cirros | active |

+--------------------------------------+--------+--------+

Check that the cells and the Placement API are working

[root@controller ~]# nova-status upgrade check

+---------------------------+

| Upgrade Check Results |

+---------------------------+

| Check: Cells v2 |

| Result: Success |

| Details: None |

+---------------------------+

| Check: Placement API |

| Result: Success |

| Details: None |

+---------------------------+

| Check: Resource Providers |

| Result: Success |

| Details: None |

+---------------------------+

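VII. Networking Service

Networking options differ with the server hardware, and physical machines differ from virtual machines. Configure your own environment with reference to the official documentation. This example uses virtual machines.

Installation and configuration on the management (controller) node

Basic configuration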

[root@controller ~]# mysql -uroot -p123456

CREATE DATABASE neutron;

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'192.168.1.50' IDENTIFIED BY '123456';

Replace the password with a suitable one.

Source the admin credentials

$ . admin-openrc

Create the neutron user and add the admin role

$ openstack user create --domain default --password-prompt neutron

User Password:(123456)

Repeat User Password:

Add the role

$ openstack role add --project service --user neutron admin

Create the neutron service entity and API endpoints

$ openstack service create --name neutron \
  --description "OpenStack Networking" network

$ openstack endpoint create --region RegionOne \
  network public http://controller:9696

$ openstack endpoint create --region RegionOne \
  network internal http://controller:9696

$ openstack endpoint create --region RegionOne \
  network admin http://controller:9696

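Configure networking options

Two networking options are available: Provider networks and Self-service networks. This example uses Provider networks. The configuration below corresponds to the provider networks option: service_plugins and tenant_network_types are empty, the type drivers are flat and VLAN, and VXLAN is disabled.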

Install the components

# yum install openstack-neutron openstack-neutron-ml2 \
  openstack-neutron-linuxbridge ebtables -y

Configure the server component (replace the passwords with your own)

# vim /etc/neutron/neutron.conf

[database]

connection = mysql+pymysql://neutron:123456@controller/neutron

[DEFAULT]

core_plugin = ml2

service_plugins =

transport_url = rabbit://openstack:123456@controller

auth_strategy = keystone

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = True

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = 123456

[nova]

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = 123456

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

# vim /etc/neutron/plugins/ml2/ml2_conf.ini

[DEFAULT]

[ml2]

type_drivers = flat,vlan

tenant_network_types =

mechanism_drivers = linuxbridge

extension_drivers = port_security

[ml2_type_flat]

flat_networks = provider

[securitygroup]

enable_ipset = true

# vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[DEFAULT]

[linux_bridge]

physical_interface_mappings = provider:eno33554992

Note: eno33554992 is the second NIC, the one without an IP address.

[vxlan]

enable_vxlan = false

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
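physical_interface_mappings takes comma-separated physnet:interface pairs. The physnet name ("provider" here) must match flat_networks in ml2_conf.ini, or the agent cannot wire up the flat network. The sketch below is a rough re-implementation of that format for a pre-flight check. It is not neutron's own parser, and the function names are hypothetical.

```python
from typing import Dict, List


def parse_interface_mappings(value: str) -> Dict[str, str]:
    """Parse 'physnet:interface[,physnet:interface...]' into a dict (illustrative only)."""
    mappings: Dict[str, str] = {}
    for item in value.split(","):
        item = item.strip()
        if not item:
            continue
        pieces = item.split(":")
        if len(pieces) != 2 or not pieces[0].strip() or not pieces[1].strip():
            raise ValueError(f"invalid mapping: {item!r}")
        physnet, interface = pieces[0].strip(), pieces[1].strip()
        if physnet in mappings:
            raise ValueError(f"duplicate physical network: {physnet!r}")
        mappings[physnet] = interface
    return mappings


def unmapped_flat_networks(flat_networks: str, mappings: Dict[str, str]) -> List[str]:
    """Physical networks from [ml2_type_flat] flat_networks that have no interface mapping."""
    names = [name.strip() for name in flat_networks.split(",") if name.strip()]
    return [name for name in names if name not in mappings]
```

For this manual, `parse_interface_mappings("provider:eno33554992")` yields `{"provider": "eno33554992"}`. `unmapped_flat_networks("provider", ...)` on that result is then empty.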

# vim /etc/neutron/dhcp_agent.ini

[DEFAULT]

interface_driver = linuxbridge

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

Configure the metadata agent

# vim /etc/neutron/metadata_agent.ini

[DEFAULT]

nova_metadata_ip = controller

metadata_proxy_shared_secret = 123456

Note: 123456 is a user-defined secret string. It must match metadata_proxy_shared_secret in nova.conf below.

Add the following configuration to /etc/nova/nova.conf:

# vim /etc/nova/nova.conf

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = 123456

service_metadata_proxy = true

metadata_proxy_shared_secret = 123456
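The shared secret must be identical in metadata_agent.ini ([DEFAULT]) and nova.conf ([neutron]), and service_metadata_proxy must be enabled. Otherwise instances cannot fetch metadata. A minimal consistency check with configparser is sketched below. It takes the file contents as strings and assumes both files are plain INI as shown in this manual.

```python
import configparser


def _parse(text: str) -> configparser.ConfigParser:
    parser = configparser.ConfigParser(interpolation=None, strict=False, allow_no_value=True)
    parser.read_string(text)
    return parser


def metadata_secrets_match(metadata_agent_ini: str, nova_conf: str) -> bool:
    """True if both files set the same non-empty secret and nova enables the metadata proxy."""
    agent = _parse(metadata_agent_ini)
    nova = _parse(nova_conf)
    # metadata_agent.ini keeps the secret in [DEFAULT]; nova.conf keeps it in [neutron].
    agent_secret = agent.defaults().get("metadata_proxy_shared_secret")
    nova_secret = nova.get("neutron", "metadata_proxy_shared_secret", fallback=None)
    proxy_enabled = nova.getboolean("neutron", "service_metadata_proxy", fallback=False)
    return bool(agent_secret) and agent_secret == nova_secret and proxy_enabled
```

On the controller, call it with the contents of /etc/neutron/metadata_agent.ini and /etc/nova/nova.conf.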

Finalize installation

Create a symbolic link to the ML2 plug-in configuration file

# ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

Populate the database

# su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \
  --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

Restart the Compute API service

# systemctl restart openstack-nova-api.service

Enable and start the Networking services

# systemctl enable neutron-server.service \
  neutron-linuxbridge-agent.service neutron-dhcp-agent.service \
  neutron-metadata-agent.service

# systemctl start neutron-server.service \
  neutron-linuxbridge-agent.service neutron-dhcp-agent.service \
  neutron-metadata-agent.service

Installation and configuration on all compute nodes

Install the components

# yum install openstack-neutron-linuxbridge ebtables ipset -y

Configure the common component

# vim /etc/neutron/neutron.conf

[DEFAULT]

auth_strategy = keystone

transport_url = rabbit://openstack:123456@controller

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = 123456

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

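Configure the other compute nodes the same way. If you copy the configuration files with scp, fix the file ownership afterwards.

Copy the configuration file to the other compute nodes, then change the file owner on each of them:

# scp /etc/neutron/neutron.conf root@computer02:/etc/neutron/

Then, on the other node:

# chown root:neutron /etc/neutron/neutron.conf

Configure networking options

# vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini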

[DEFAULT]

[linux_bridge]

physical_interface_mappings = provider:eno33554992

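Note: eno33554992 stands in for PROVIDER_INTERFACE_NAME. It must be the name of this compute node's physical NIC for the provider network, not the management-network NIC.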

[vxlan]

enable_vxlan = false

[securitygroup]

enable_security_group = True

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
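When this file is copied between compute nodes, the interface name in physical_interface_mappings must still match a NIC that exists on each node. The sketch below parses a mapping value and checks for the interface under /sys/class/net. `mapping_iface` and `provider_iface_exists` are hypothetical helpers, and the check assumes a Linux host with sysfs mounted.

```shell
# Hypothetical helpers (not part of neutron).

# mapping_iface MAPPINGS PHYSNET: print the interface mapped to PHYSNET in a
# physical_interface_mappings value such as "provider:eno33554992".
mapping_iface() {
    printf '%s\n' "$1" | tr ',' '\n' | awk -F ':' -v net="$2" '
        {
            k = $1; v = $2
            gsub(/[ \t]/, "", k); gsub(/[ \t]/, "", v)
            if (k == net) print v
        }'
}

# provider_iface_exists MAPPINGS PHYSNET: succeed if the mapped NIC exists
# on this host.
provider_iface_exists() {
    iface=$(mapping_iface "$1" "$2")
    [ -n "$iface" ] && [ -d "/sys/class/net/$iface" ]
}
```

For example, `provider_iface_exists 'provider:eno33554992' provider && echo present` on each compute node.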

Copy this file to the other compute nodes as well, and fix its ownership there.

Configure Compute on all compute nodes to use Networking

# vim /etc/nova/nova.conf

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = 123456

Finalize installation

Restart the Compute service:

# systemctl restart openstack-nova-compute.service

Enable the Linux bridge agent at boot and start it:

# systemctl enable neutron-linuxbridge-agent.service

# systemctl start neutron-linuxbridge-agent.service

Verify operation

Run the following on the controller (management) node:

$ . admin-openrc

$ openstack extension list --network

+------------------------------------------------+---------------------------+------------------------------------------------+

| Name | Alias | Description |

+------------------------------------------------+---------------------------+------------------------------------------------+

| Default Subnetpools | default-subnetpools | Provides ability to mark and use a subnetpool |

| | | as the default |

| Availability Zone | availability_zone | The availability zone extension. |

| Network Availability Zone | network_availability_zone | Availability zone support for network. |

| Port Binding | binding | Expose port bindings of a virtual port to |

| | | external application |

| agent | agent | The agent management extension. |

| Subnet Allocation | subnet_allocation | Enables allocation of subnets from a subnet |

| | | pool |

| DHCP Agent Scheduler | dhcp_agent_scheduler | Schedule networks among dhcp agents |

| Tag support | tag | Enables to set tag on resources. |

| Neutron external network | external-net | Adds external network attribute to network |

| | | resource. |

| Neutron Service Flavors | flavors | Flavor specification for Neutron advanced |

| | | services |

| Network MTU | net-mtu | Provides MTU attribute for a network resource. |

| Network IP Availability | network-ip-availability | Provides IP availability data for each network |

| | | and subnet. |

| Quota management support | quotas | Expose functions for quotas management per |

| | | tenant |

| Provider Network | provider | Expose mapping of virtual networks to physical |

| | | networks |

| Multi Provider Network | multi-provider | Expose mapping of virtual networks to multiple |

| | | physical networks |

| Address scope | address-scope | Address scopes extension. |

| Subnet service types | subnet-service-types | Provides ability to set the subnet |

| | | service_types field |

| Resource timestamps | standard-attr-timestamp | Adds created_at and updated_at fields to all |

| | | Neutron resources that have Neutron standard |

| | | attributes. |

| Neutron Service Type Management | service-type | API for retrieving service providers for |

| | | Neutron advanced services |

| Tag support for resources: subnet, subnetpool, | tag-ext | Extends tag support to more L2 and L3 |

| port, router | | resources. |

| Neutron Extra DHCP opts | extra_dhcp_opt | Extra options configuration for DHCP. For |

| | | example PXE boot options to DHCP clients can |

| | | be specified (e.g. tftp-server, server-ip- |

| | | address, bootfile-name) |

| Resource revision numbers | standard-attr-revisions | This extension will display the revision |

| | | number of neutron resources. |

| Pagination support | pagination | Extension that indicates that pagination is |

| | | enabled. |

| Sorting support | sorting | Extension that indicates that sorting is |

| | | enabled. |

| security-group | security-group | The security groups extension. |

| RBAC Policies | rbac-policies | Allows creation and modification of policies |

| | | that control tenant access to resources. |

| standard-attr-description | standard-attr-description | Extension to add descriptions to standard |

| | | attributes |

| Port Security | port-security | Provides port security |

| Allowed Address Pairs | allowed-address-pairs | Provides allowed address pairs |

| project_id field enabled | project-id | Extension that indicates that project_id field |

| | | is enabled. |

+------------------------------------------------+---------------------------+------------------------------------------------+

[root@controller neutron]# openstack network agent list

+-----------------------+--------------------+------------+-------------------+-------+-------+------------------------+

| ID | Agent Type | Host | Availability Zone | Alive | State | Binary |

+-----------------------+--------------------+------------+-------------------+-------+-------+------------------------+

| 08c609b7-465b-4569 | Metadata agent | controller | None | True | UP | neutron-metadata-agent |

| -bfec-dab6894a19a1 | | | | | | |

| 0eda3b79-6694-4fc1-83 | Linux bridge agent | compute | None | True | UP | neutron-linuxbridge- |

| 95-7552bb9a5dc8 | | | | | | agent |

| 1a505b4d-7bac-4614-84 | Linux bridge agent | controller | None | True | UP | neutron-linuxbridge- |

| 68-455e7c916b55 | | | | | | agent |

| b9c8de85-8e58-40f9-aa | DHCP agent | controller | nova | True | UP | neutron-dhcp-agent |

| e7-bcf5b24e7d0d | | | | | | |

+-----------------------+--------------------+------------+-------------------+-------+-------+------------------------+

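In this environment, with a single compute node, the command should return four agents: three on controller and one on compute. If the nodes' clocks are not synchronized, agent status may be reported incorrectly, so make sure time synchronization is working.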
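The expected agent count can also be checked from a script. This is a minimal sketch, assuming the output of `openstack network agent list -f value -c Host -c Alive` (one "host alive" pair per line) and that the Alive column prints True, as in the table above. `agent_summary` is a hypothetical helper, and the host names controller and compute are this example's.

```shell
# Hypothetical helper: summarize "<host> <alive>" lines read from stdin.
# Prints the total, the number of agents not reported alive, and the
# per-host counts for this example's two hosts.
agent_summary() {
    awk '
        NF >= 2 { total++; per[$1]++; if ($2 != "True") dead++ }
        END {
            printf "total=%d dead=%d controller=%d compute=%d\n",
                total, dead, per["controller"], per["compute"]
        }'
}
```

Usage: `openstack network agent list -f value -c Host -c Alive | agent_summary` should print `total=4 dead=0 controller=3 compute=1` here.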

8. Dashboard service

Install and configure on the controller node

Install the package

# yum install openstack-dashboard -y

Edit the configuration

# vim /etc/openstack-dashboard/local_settings

OPENSTACK_HOST = "controller"

ALLOWED_HOSTS = ['*', ]

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {
    'default': {
        'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',
        'LOCATION': 'controller:11211',
    },
}

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

OPENSTACK_API_VERSIONS = {
    "identity": 3,
    "image": 2,
    "volume": 2,
}

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "default"

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

If you chose networking option 1 (provider networks), set:

OPENSTACK_NEUTRON_NETWORK = {
    …
    'enable_router': False,
    'enable_quotas': False,
    'enable_distributed_router': False,
    'enable_ha_router': False,
    'enable_lb': False,
    'enable_firewall': False,
    'enable_vpn': False,
    'enable_fip_topology_check': False,
}

TIME_ZONE = "UTC"

Finalize installation

# systemctl restart httpd.service memcached.service

Verify operation

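Browse to http://controller/dashboard, replacing controller with the controller node's IP address if your client cannot resolve that host name.

Enter default as the domain and log in as admin or demo with the password 123456.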

9. Networking

Create the network

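This guide uses provider networks only. For other networking options, see the official installation guide.

On the controller node, source the admin credentials to gain access to admin-only CLI commands: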

[root@controller ~]# . admin-openrc

[root@controller ~]# openstack network create --share --external \
--provider-physical-network provider \
--provider-network-type flat provider

+---------------------------+--------------------------------------+

| Field | Value |

+---------------------------+--------------------------------------+

| admin_state_up | UP |

| availability_zone_hints | |

| availability_zones | |

| created_at | 2018-03-19T03:59:31Z |

| description | |

| dns_domain | None |

| id | 7f348c32-e7ae-4b46-b125-f45e0df4ad84 |

| ipv4_address_scope | None |

| ipv6_address_scope | None |

| is_default | None |

| mtu | 1500 |

| name | provider |

| port_security_enabled | True |

| project_id | e4fbba4efcd5412fa9a944d808b2d5f7 |

| provider:network_type | flat |

| provider:physical_network | provider |

| provider:segmentation_id | None |

| qos_policy_id | None |

| revision_number | 4 |

| router:external | External |

| segments | None |

| shared | True |

| status | ACTIVE |

| subnets | |

| updated_at | 2018-03-19T03:59:32Z |

+---------------------------+--------------------------------------+

Create a subnet

Adjust the allocation pool, DNS server, gateway and subnet range to match your environment:

[root@controller ~]# openstack subnet create --network provider --allocation-pool start=192.168.1.120,end=192.168.1.130 --dns-nameserver 192.168.0.1 --gateway 192.168.0.1 --subnet-range 192.168.0.0/16 provider

+-------------------+--------------------------------------+

| Field | Value |

+-------------------+--------------------------------------+

| allocation_pools | 192.168.1.120-192.168.1.130 |

| cidr | 192.168.0.0/16 |

| created_at | 2018-03-19T04:00:03Z |

| description | |

| dns_nameservers | 192.168.0.1 |

| enable_dhcp | True |

| gateway_ip | 192.168.0.1 |

| host_routes | |

| id | ebac3f42-006f-4ed1-b3b1-915fb467eb28 |

| ip_version | 4 |

| ipv6_address_mode | None |

| ipv6_ra_mode | None |

| name | provider |

| network_id | 7f348c32-e7ae-4b46-b125-f45e0df4ad84 |

| project_id | e4fbba4efcd5412fa9a944d808b2d5f7 |

| revision_number | 2 |

| segment_id | None |

| service_types | |

| subnetpool_id | None |

| updated_at | 2018-03-19T04:00:03Z |

+-------------------+--------------------------------------+
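Before creating the subnet it can help to confirm that the allocation pool and gateway actually lie inside the subnet range and that the gateway is not handed out by the pool. The following is a minimal sketch under those assumptions. `ip2int`, `ip_in_cidr` and `check_subnet` are hypothetical helpers, and the sketch relies on 64-bit shell arithmetic (as in bash or dash on 64-bit Linux).

```shell
# Hypothetical helpers (not part of OpenStack).

# ip2int A.B.C.D: print the IPv4 address as an unsigned 32-bit integer.
ip2int() {
    old_ifs=$IFS
    IFS=.
    set -- $1
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# ip_in_cidr IP NET/BITS: succeed if IP lies inside the prefix.
ip_in_cidr() {
    ip_n=$(ip2int "$1")
    net_n=$(ip2int "${2%/*}")
    bits=${2#*/}
    mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( ip_n & mask )) -eq $(( net_n & mask )) ]
}

# check_subnet START END GATEWAY CIDR: pool bounds and gateway inside CIDR,
# START <= END, and the gateway outside the allocation pool.
check_subnet() {
    ip_in_cidr "$1" "$4" && ip_in_cidr "$2" "$4" && ip_in_cidr "$3" "$4" || return 1
    s=$(ip2int "$1"); e=$(ip2int "$2"); g=$(ip2int "$3")
    [ "$s" -le "$e" ] || return 1
    [ "$g" -lt "$s" ] || [ "$g" -gt "$e" ]
}
```

With this example's values, `check_subnet 192.168.1.120 192.168.1.130 192.168.0.1 192.168.0.0/16 && echo ok` prints ok.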

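Create the m1.nano flavor

The smallest default flavor requires 512 MB of memory. For environments whose compute nodes have less than 4 GB of memory, we recommend creating the m1.nano flavor, which needs only 64 MB. For testing purposes only, use the m1.nano flavor to boot the CirrOS image.

[root@controller ~]# openstack flavor create --id 0 --vcpus 1 --ram 64 --disk 1 m1.nano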

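Generate a key pair

Most cloud images support public key authentication rather than conventional password authentication. Before launching an instance, you must add a public key to the Compute service.

Source the admin credentials: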

[root@controller ~]# . admin-openrc

Generate and add a key pair

Use an existing public key, or create one if none exists:

[root@controller ~]# ssh-keygen -t rsa

Press Enter three times to accept the defaults.

[root@controller ~]# openstack keypair create --public-key ~/.ssh/id_rsa.pub mykey

+-------------+-------------------------------------------------+

| Field | Value |

+-------------+-------------------------------------------------+

| fingerprint | e3:f3:b4:f0:da:28:f5:23:11:7c:87:7c:50:24:9b:81 |

| name | mykey |

| user_id | 0546c9bc04d14da4a2e87b8376467b8a |

+-------------+-------------------------------------------------+

Verify that the key pair was added:

[root@controller ~]# openstack keypair list

+-------+-------------------------------------------------+

| Name | Fingerprint |

+-------+-------------------------------------------------+

| mykey | e3:f3:b4:f0:da:28:f5:23:11:7c:87:7c:50:24:9b:81 |

+-------+-------------------------------------------------+
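To confirm that the registered key really is ~/.ssh/id_rsa.pub, its fingerprint can be recomputed locally. This sketch assumes the colon-separated value shown by `openstack keypair list` is the legacy MD5 digest of the base64-decoded key blob; recent OpenSSH versions print the same form with `ssh-keygen -l -E md5 -f`, and the sketch avoids depending on that option. `md5_fingerprint` is a hypothetical helper using GNU coreutils (base64, md5sum).

```shell
# Hypothetical helper: legacy MD5 fingerprint of an OpenSSH public key file
# ("type base64-blob comment"), formatted as colon-separated hex pairs.
md5_fingerprint() {
    awk '{ print $2; exit }' "$1" | base64 -d | md5sum | awk '{ print $1 }' |
        sed 's/../&:/g; s/:$//'
}
```

Usage: `md5_fingerprint ~/.ssh/id_rsa.pub` should print the same value as the Fingerprint column above.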

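Add security group rules

By default, the default security group applies to all instances and includes firewall rules that deny remote access to instances. For Linux images such as CirrOS, we recommend allowing at least ICMP (ping) and secure shell (SSH).

Permit ICMP (ping):

[root@controller ~]# openstack security group rule create --proto icmp default

Permit secure shell (SSH) access:

[root@controller ~]# openstack security group rule create --proto tcp --dst-port 22 default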

10. Block Storage service

Install and configure on the management (controller) node

Basic configuration

[root@controller ~]# mysql -uroot -p123456

CREATE DATABASE cinder;

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' \
IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' \
IDENTIFIED BY '123456';

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'192.168.1.50' \
IDENTIFIED BY '123456';

$ . admin-openrc

$ openstack user create --domain default --password-prompt cinder

User Password:(123456)

Repeat User Password:

$ openstack role add --project service --user cinder admin

$ openstack service create --name cinderv2 \
--description "OpenStack Block Storage" volumev2

$ openstack service create --name cinderv3 \
--description "OpenStack Block Storage" volumev3

Create the Block Storage service API endpoints:

$ openstack endpoint create --region RegionOne \
volumev2 public http://controller:8776/v2/%\(project_id\)s

$ openstack endpoint create --region RegionOne \
volumev2 internal http://controller:8776/v2/%\(project_id\)s

$ openstack endpoint create --region RegionOne \
volumev2 admin http://controller:8776/v2/%\(project_id\)s

$ openstack endpoint create --region RegionOne \
volumev3 public http://controller:8776/v3/%\(project_id\)s

$ openstack endpoint create --region RegionOne \
volumev3 internal http://controller:8776/v3/%\(project_id\)s

$ openstack endpoint create --region RegionOne \
volumev3 admin http://controller:8776/v3/%\(project_id\)s

Install and configure the components

# yum install openstack-cinder -y

# vim /etc/cinder/cinder.conf

[database]

connection = mysql+pymysql://cinder:123456@controller/cinder

[DEFAULT]

transport_url = rabbit://openstack:123456@controller

auth_strategy = keystone

my_ip = 192.168.1.50

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = 123456

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

Populate the Block Storage database:

# su -s /bin/sh -c "cinder-manage db sync" cinder

Configure Compute to use Block Storage by adding the following:

# vim /etc/nova/nova.conf

[cinder]

os_region_name = RegionOne

Restart the Compute API service:

# systemctl restart openstack-nova-api.service

Enable the Block Storage services at boot and start them:

# systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service

# systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service

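Install and configure on all storage nodes

(In this example the storage node is block_swift.) The Block Storage node serves volumes from a local disk managed by LVM; here that is a single disk, sdb. For other back ends, see the official installation guide.

Install LVM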

[root@block_swift ~]# yum install lvm2 -y

[root@block_swift ~]# systemctl enable lvm2-lvmetad.service

[root@block_swift ~]# systemctl start lvm2-lvmetad.service

Configure LVM: create the physical volume and the cinder-volumes volume group

# pvcreate /dev/sdb

# vgcreate cinder-volumes /dev/sdb

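Add a device filter

Edit /etc/lvm/lvm.conf and set the following in the devices section. If the operating system disk also uses LVM, add that device to the filter as well: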

devices {

…

filter = [ "a/sdb/", "r/.*/"]
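The filter accepts ("a") the listed devices and rejects ("r") everything else; the patterns are regular expressions matched against device paths. When more devices need to be accepted (for example an LVM-based OS disk), the line can be generated. `lvm_filter` is a hypothetical helper that only prints the line; it does not edit lvm.conf.

```shell
# Hypothetical helper: print an lvm.conf filter line that accepts the given
# device names and rejects all others.
lvm_filter() {
    line='filter = ['
    for dev in "$@"; do
        line="$line \"a/$dev/\","
    done
    printf '%s "r/.*/"]\n' "$line"
}
```

For instance, `lvm_filter sda sdb` prints `filter = [ "a/sda/", "a/sdb/", "r/.*/"]`.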

Install the Cinder packages

# yum install openstack-cinder targetcli python-keystone -y

Edit the configuration file

# vim /etc/cinder/cinder.conf

[database]

connection = mysql+pymysql://cinder:123456@controller/cinder

[DEFAULT]

transport_url = rabbit://openstack:123456@controller

auth_strategy = keystone

enabled_backends = lvm

glance_api_servers = http://controller:9292

my_ip = 192.168.1.52

Note: my_ip is the management-network IP address of this storage node.

[lvm]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group = cinder-volumes

iscsi_protocol = iscsi

iscsi_helper = lioadm

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = 123456

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

Enable the services at boot and start them:

# systemctl enable openstack-cinder-volume.service target.service

# systemctl start openstack-cinder-volume.service target.service

Verify operation

On the controller node:

# . admin-openrc

$ openstack volume service list

+------------------+-----------------+------+---------+-------+----------------------------+

| Binary | Host | Zone | Status | State | Updated At |

+------------------+-----------------+------+---------+-------+----------------------------+

| cinder-scheduler | controller | nova | enabled | up | 2018-03-19T02:42:02.000000 |

| cinder-volume | block_swift@lvm | nova | enabled | up | 2018-03-19T02:41:47.000000 |

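Create an instance from the web UI

Follow the dashboard prompts step by step.

At this point the basic OpenStack environment is complete, and functional testing of virtual machines can begin.

Problem encountered: opening an instance console from the dashboard failed because the host name controller could not be resolved.

Fix: on the compute node, in the [vnc] section of nova.conf, replace controller with its IP address 192.168.1.50 (for example in novncproxy_base_url), then restart the Compute service: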

systemctl restart openstack-nova-compute.service
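The edit above can be scripted so that only URLs inside the [vnc] section change and other sections (such as [glance] or transport_url) keep using the host name. This is a minimal sketch using GNU `sed -i`; `vnc_use_ip` is a hypothetical helper, and it is worth backing up nova.conf before running it.

```shell
# Hypothetical helper: in FILE, replace "http://controller:" with
# "http://IP:" only between the [vnc] header and the next section header.
vnc_use_ip() {
    sed -i "/^\[vnc\]/,/^\[/ s#http://controller:#http://$2:#" "$1"
}
```

Usage on the compute node: `vnc_use_ip /etc/nova/nova.conf 192.168.1.50`, then restart openstack-nova-compute as shown above.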

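11. Object Storage service (swift)

OpenStack Object Storage is a multi-tenant object storage system. It is highly scalable and can manage large amounts of unstructured data at low cost through a RESTful HTTP API. In this example the object storage node is block_swift, with disk sdc as its storage device. Production deployments normally add redundant devices, including redundant proxy (gateway) nodes, to protect the objects; see the official documentation for details. This example covers only a minimal deployment and basic functional verification.

Deploy on the controller node

. admin-openrc

Create the swift user:

[root@controller ~]# openstack user create --domain default --password-prompt swift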

User Password:123456

Repeat User Password:

+---------------------+----------------------------------+

| Field | Value |

+---------------------+----------------------------------+

| domain_id | default |

| enabled | True |

| id | 00e0170c54a14b48b1c63e95b1e75adf |

| name | swift |

| options | {} |

| password_expires_at | None |

Grant the admin role to the swift user:

[root@controller ~]# openstack role add --project service --user swift admin

Create the swift service entity:

[root@controller ~]# openstack service create --name swift --description "OpenStack Object Storage" object-store

Create the Object Storage service API endpoints:

[root@controller ~]# openstack endpoint create --region RegionOne \
> object-store public http://controller:8080/v1/AUTH_%\(tenant_id\)s

+--------------+----------------------------------------------+

| Field | Value |

+--------------+----------------------------------------------+

| enabled | True |

| id | 980f19b935144e069cf28bff8dea63d9 |

| interface | public |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 31f12ef9cf0a498496e6739388fa6481 |

| service_name | swift |

| service_type | object-store |

| url | http://controller:8080/v1/AUTH_%(tenant_id)s |

+--------------+----------------------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne \
> object-store internal http://controller:8080/v1/AUTH_%\(tenant_id\)s

+--------------+----------------------------------------------+

| Field | Value |

+--------------+----------------------------------------------+

| enabled | True |

| id | 8c4f7ef4d9614f0c85154968f0ce8b1d |

| interface | internal |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 31f12ef9cf0a498496e6739388fa6481 |

| service_name | swift |

| service_type | object-store |

| url | http://controller:8080/v1/AUTH_%(tenant_id)s |

+--------------+----------------------------------------------+

[root@controller ~]# openstack endpoint create --region RegionOne \
> object-store admin http://controller:8080/v1

+--------------+----------------------------------+

| Field | Value |

+--------------+----------------------------------+

| enabled | True |

| id | 8672d43ac69346f0b0129fde4b4f2230 |

| interface | admin |

| region | RegionOne |

| region_id | RegionOne |

| service_id | 31f12ef9cf0a498496e6739388fa6481 |

| service_name | swift |

| service_type | object-store |

| url | http://controller:8080/v1 |

+--------------+----------------------------------+

Install the packages

[root@controller ~]# yum install openstack-swift-proxy python-swiftclient \
python-keystoneclient python-keystonemiddleware \
memcached -y

Download the sample proxy configuration file:

[root@controller ~]# curl -o /etc/swift/proxy-server.conf https://git.openstack.org/cgit/openstack/swift/plain/etc/proxy-server.conf-sample?h=stable/ocata

Edit /etc/swift/proxy-server.conf, changing or adding the following settings on top of the existing sample content. Check each value carefully.

[DEFAULT]

…

bind_port = 8080

user = swift

swift_dir = /etc/swift

[pipeline:main]

pipeline = catch_errors gatekeeper healthcheck proxy-logging cache container_sync bulk ratelimit authtoken keystoneauth container-quotas account-quotas slo dlo versioned_writes proxy-logging proxy-server

[app:proxy-server]

use = egg:swift#proxy

…

account_autocreate = True

[filter:keystoneauth]

use = egg:swift#keystoneauth

…

operator_roles = admin,user

[filter:authtoken]

paste.filter_factory = keystonemiddleware.auth_token:filter_factory

…

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = swift

password = 123456

delay_auth_decision = True

[filter:cache]

use = egg:swift#memcache

…

memcache_servers = controller:11211

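Deploy on the storage node

In this guide the swift storage node is block_swift (192.168.1.52), with the single disk sdc used for object storage. This is for functional testing only; for multiple object storage nodes, follow the official guide.

Install the XFS utilities and rsync. With a single disk in this example, no rsync replication actually takes place.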

# yum install xfsprogs rsync

Format disk sdc as XFS:

mkfs.xfs /dev/sdc

Create the mount point:

# mkdir -p /srv/node/sdc

Add the following line to /etc/fstab:

/dev/sdc /srv/node/sdc xfs noatime,nodiratime,nobarrier,logbufs=8 0 2

Mount the device:

mount /dev/sdc /srv/node/sdc/

Install the packages:

# yum install openstack-swift-account openstack-swift-container \
openstack-swift-object -y

Download the sample configuration files:

# curl -o /etc/swift/account-server.conf https://git.openstack.org/cgit/openstack/swift/plain/etc/account-server.conf-sample?h=stable/ocata

# curl -o /etc/swift/container-server.conf https://git.openstack.org/cgit/openstack/swift/plain/etc/container-server.conf-sample?h=stable/ocata

# curl -o /etc/swift/object-server.conf https://git.openstack.org/cgit/openstack/swift/plain/etc/object-server.conf-sample?h=stable/ocata

Edit /etc/swift/account-server.conf; change only the following settings and leave everything else in place:

[DEFAULT]

…

bind_ip = 192.168.1.52

bind_port = 6202

user = swift

swift_dir = /etc/swift

devices = /srv/node

mount_check = True

[pipeline:main]

pipeline = healthcheck recon account-server

[filter:recon]

use = egg:swift#recon

recon_cache_path = /var/cache/swift

Edit /etc/swift/container-server.conf; again, keep the other default settings:

[DEFAULT]

…

bind_ip = 192.168.1.52

bind_port = 6201

user = swift

swift_dir = /etc/swift

devices = /srv/node

mount_check = True

[pipeline:main]

pipeline = healthcheck recon container-server

[filter:recon]

use = egg:swift#recon

recon_cache_path = /var/cache/swift

Edit /etc/swift/object-server.conf:

[DEFAULT]

…

bind_ip = 192.168.1.52

bind_port = 6200

user = swift

swift_dir = /etc/swift

devices = /srv/node

mount_check = True

[pipeline:main]

pipeline = healthcheck recon object-server

[filter:recon]

use = egg:swift#recon

recon_cache_path = /var/cache/swift

recon_lock_path = /var/lock

Set ownership of the mount point:

# chown -R swift:swift /srv/node

Create the recon cache directory and set its ownership and permissions:

# mkdir -p /var/cache/swift

# chown -R root:swift /var/cache/swift

# chmod -R 775 /var/cache/swift

Create the account ring

Perform these steps on the controller node.

Change to the /etc/swift directory.

Create the account.builder file:

# swift-ring-builder account.builder create 10 1 1

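#### 10 is the partition power (2^10 = 1024 partitions), the middle 1 is the number of replicas, and the last 1 is the minimum number of hours before a partition can be moved again.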
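The partition count follows directly from the part power, and with this guide's single device every partition replica lands on sdc, which is why the rebalance below reports 1024 reassigned partitions. The helpers below are a hypothetical sketch of that arithmetic; they assume an integer replica count and evenly weighted devices.

```shell
# Hypothetical helpers: ring arithmetic for "swift-ring-builder ... create".

# ring_partitions PART_POWER: number of partitions, 2^PART_POWER.
ring_partitions() {
    echo $(( 1 << $1 ))
}

# parts_per_device PART_POWER REPLICAS DEVICES: partition replicas per
# device, assuming equal weights and an integer replica count.
parts_per_device() {
    echo $(( (1 << $1) * $2 / $3 ))
}
```

For this example, `ring_partitions 10` and `parts_per_device 10 1 1` both give 1024.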

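Add the storage node to the ring:

# swift-ring-builder account.builder add \
--region 1 --zone 1 --ip 192.168.1.52 --port 6202 --device sdc --weight 100

Device d0r1z1-192.168.1.52:6202R192.168.1.52:6202/sdc_"" with 100.0 weight got id 0

#### With multiple hosts or disks, repeat the add command once per device, changing the device name and IP address. This example has a single host with a single disk.

Verify the ring contents, then rebalance: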

[root@controller swift]# swift-ring-builder account.builder

account.builder, build version 1

1024 partitions, 1.000000 replicas, 1 regions, 1 zones, 1 devices, 100.00 balance, 0.00 dispersion

The minimum number of hours before a partition can be reassigned is 1 (0:00:00 remaining)

The overload factor is 0.00% (0.000000)

Ring file account.ring.gz not found, probably it hasn't been written yet

Devices: id region zone ip address:port replication ip:port name weight partitions balance flags meta

0 1 1 192.168.1.52:6202 192.168.1.52:6202 sdc 100.00 0 -100.00

[root@controller swift]# swift-ring-builder account.builder rebalance

Reassigned 1024 (100.00%) partitions. Balance is now 0.00. Dispersion is now 0.00

Create the container ring

Change to the /etc/swift directory.

# swift-ring-builder container.builder create 10 1 1

Add the storage node to the ring:

# swift-ring-builder container.builder add \
--region 1 --zone 1 --ip 192.168.1.52 --port 6201 --device sdc --weight 100

Verify the ring contents, then rebalance:

# swift-ring-builder container.builder

# swift-ring-builder container.builder rebalance

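Create the object ring

Change to the /etc/swift directory.

# swift-ring-builder object.builder create 10 1 1

Add the storage node to the ring:

# swift-ring-builder object.builder add --region 1 --zone 1 --ip 192.168.1.52 --port 6200 --device sdc --weight 100

Verify the ring contents, then rebalance: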

# swift-ring-builder object.builder

# swift-ring-builder object.builder rebalance

Copy account.ring.gz, container.ring.gz and object.ring.gz from the current directory to /etc/swift on every storage node.

In this example there is only one storage node, 192.168.1.52:

[root@controller swift]# scp account.ring.gz container.ring.gz object.ring.gz root@192.168.1.52:/etc/swift

The authenticity of host '192.168.1.52 (192.168.1.52)' can't be established.

ECDSA key fingerprint is SHA256:2cGEDBy4gxA4KS46Yzrf7m4dhDeLWQ9zSbYgiBN8OeQ.

ECDSA key fingerprint is MD5:64:47:33:5b:a1:88:7a:92:d4:d5:13:7f:73:d2:81:0d.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '192.168.1.52' (ECDSA) to the list of known hosts.

root@192.168.1.52's password:

account.ring.gz 100% 206 4.5KB/s 00:00

container.ring.gz 100% 208 7.4KB/s 00:00

object.ring.gz

Finalize installation

On the controller node

Download the sample configuration file:

curl -o /etc/swift/swift.conf https://git.openstack.org/cgit/openstack/swift/plain/etc/swift.conf-sample?h=stable/ocata

Edit /etc/swift/swift.conf:

[swift-hash]

swift_hash_path_suffix = 123456

swift_hash_path_prefix = 123456

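Replace 123456 in both settings with your own unique secret values. Keep them secret, and do not change or lose them after the cluster is in use.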

[storage-policy:0]

name = Policy-0

default = yes
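For swift_hash_path_prefix and swift_hash_path_suffix, random hex strings are a reasonable choice instead of 123456. A minimal sketch that reads /dev/urandom directly (`openssl rand -hex 10` is a common alternative); `random_hex` is a hypothetical helper, and 10 bytes is an arbitrary default.

```shell
# Hypothetical helper: print N random bytes (default 10) as lowercase hex.
# od -v disables "*" line suppression so repeated lines are never elided.
random_hex() {
    head -c "${1:-10}" /dev/urandom | od -An -v -tx1 | tr -d ' \n'
}
```

Run it twice and paste the two results into swift.conf before copying the file to the other nodes.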

Distribute the file

Copy swift.conf to /etc/swift on every storage node and on any other node that runs the proxy service (normally the controller node):

scp swift.conf root@192.168.1.52:/etc/swift/

Set ownership (on both the controller node and the storage node):

chown -R root:swift /etc/swift

Enable and start the proxy service and memcached on the controller node:

# systemctl enable openstack-swift-proxy.service memcached.service

# systemctl start openstack-swift-proxy.service memcached.service

Start the services on the storage node

In this example that is the block_swift node, 192.168.1.52:

# systemctl enable openstack-swift-account.service openstack-swift-account-auditor.service \
openstack-swift-account-reaper.service openstack-swift-account-replicator.service

# systemctl start openstack-swift-account.service openstack-swift-account-auditor.service \
openstack-swift-account-reaper.service openstack-swift-account-replicator.service

# systemctl enable openstack-swift-container.service \
openstack-swift-container-auditor.service openstack-swift-container-replicator.service \
openstack-swift-container-updater.service

# systemctl start openstack-swift-container.service \
openstack-swift-container-auditor.service openstack-swift-container-replicator.service \
openstack-swift-container-updater.service

# systemctl enable openstack-swift-object.service openstack-swift-object-auditor.service \
openstack-swift-object-replicator.service openstack-swift-object-updater.service

# systemctl start openstack-swift-object.service openstack-swift-object-auditor.service \
openstack-swift-object-replicator.service openstack-swift-object-updater.service

Verify

On the controller node:

Create the directory and set its SELinux context:

# mkdir -p /srv/node

# chcon -R system_u:object_r:swift_data_t:s0 /srv/node

Show the account status:

[root@controller ~]# swift stat

Account: AUTH_e4fbba4efcd5412fa9a944d808b2d5f7

Containers: 0

Objects: 0

Bytes: 0

X-Put-Timestamp: 1521447468.56468

X-Timestamp: 1521447468.56468

X-Trans-Id: tx9f6f28e2a2c44746b5456-005aaf722b

Content-Type: text/plain; charset=utf-8

X-Openstack-Request-Id: tx9f6f28e2a2c44746b5456-005aaf722b

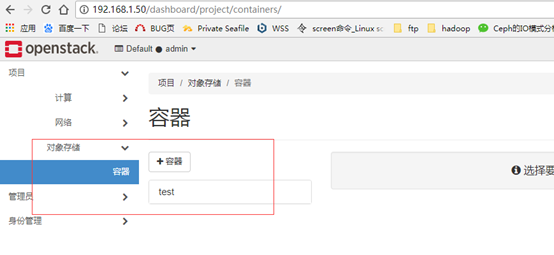

Create a container named test:

[root@controller ~]# openstack container create test

+---------------------------------------+-----------+------------------------------------+

| account | container | x-trans-id |

+---------------------------------------+-----------+------------------------------------+

| AUTH_e4fbba4efcd5412fa9a944d808b2d5f7 | test | tx41401038435944a2ad460-005aaf7287 |

Check it in the web UI as well.

Upload a file from the web UI, then confirm it from the command line:

[root@controller ~]# openstack object list test

+-----------+

| Name |

+-----------+

| zhang.txt |

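+-----------+

The basic OpenStack swift environment is now deployed.

12. Integrating Ceph RBD (also applicable to the M release)

Replace the local back-end storage of glance (images), cinder (volumes) and nova (VM disks, through libvirt) with Ceph storage pools.

Create the pools

Deployment of the Ceph cluster itself is omitted. In this example the cluster runs on three VMs: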

radosgw1 192.168.1.31 mon mds osd

radosgw2 192.168.1.32 mon mds osd

radosgw3 192.168.1.33 mon mds osd

All Ceph-side operations are performed on radosgw1:

# ceph osd pool create volumes 64

# ceph osd pool create images 64

# ceph osd pool create vms 64

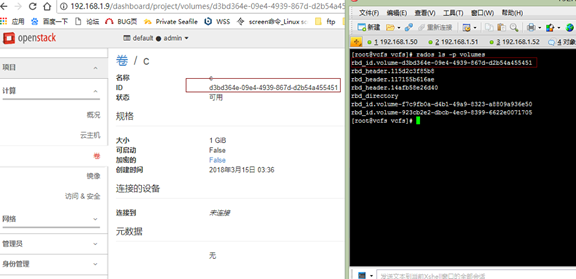

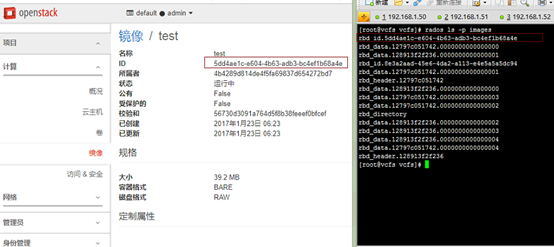

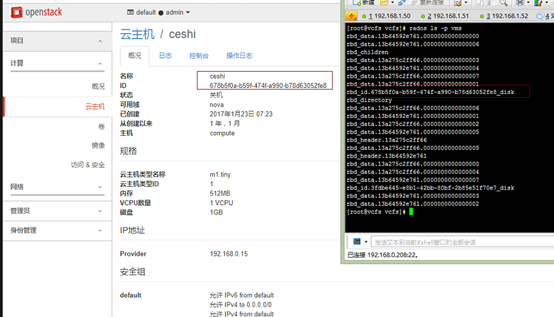

Initially none of the pools contains any objects:

[root@radosgw1 ~]# rados ls -p images

[root@radosgw1 ~]# rados ls -p vms

[root@radosgw1 ~]# rados ls -p volumes

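Install the Ceph client packages

Configure the Ceph yum repository for CentOS 7

Set up the Ceph yum repository on the controller, compute and storage nodes.

Create the file /etc/yum.repos.d/ceph.repo: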

[ceph]

name=Ceph packages for $basearch

baseurl=http://mirrors.aliyun.com/ceph/rpm-jewel/el7/x86_64/

enabled=1

priority=1

gpgcheck=1

type=rpm-md

gpgkey=http://mirrors.aliyun.com/ceph/keys/release.asc

[ceph-noarch]

name=Ceph noarch packages

baseurl=http://mirrors.aliyun.com/ceph/rpm-jewel/el7/noarch/

enabled=1

priority=1

gpgcheck=1

type=rpm-md

gpgkey=http://mirrors.aliyun.com/ceph/keys/release.asc

[ceph-source]

name=Ceph source packages

baseurl=http://mirrors.aliyun.com/ceph/rpm-jewel/el7/SRPMS/

enabled=1

priority=1

gpgcheck=1

type=rpm-md

gpgkey=http://mirrors.aliyun.com/ceph/keys/release.asc

On the glance-api node (the controller node):

yum install python-rbd -y

On the nova-compute node (the compute node):

yum install ceph-common -y

On the cinder-volume node (the storage node):

yum install ceph-common -y

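Configure Ceph client authentication for OpenStack

Run the following on the Ceph cluster node radosgw1.

Enter the remote root password when prompted.

[root@radosgw1 ~]# ssh controller sudo tee /etc/ceph/ceph.conf < /etc/ceph/ceph.conf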

[root@radosgw1 ~]# ssh compute sudo tee /etc/ceph/ceph.conf < /etc/ceph/ceph.conf

[root@radosgw1 ~]# ssh block_swift sudo tee /etc/ceph/ceph.conf < /etc/ceph/ceph.conf

If cephx authentication is enabled, create new Ceph users for Nova/Cinder and Glance:

ceph auth get-or-create client.cinder mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=volumes, allow rwx pool=vms, allow rx pool=images'

ceph auth get-or-create client.glance mon 'allow r' osd 'allow class-read object_prefix rbd_children, allow rwx pool=images'

Add the keyring for client.glance:

ceph auth get-or-create client.glance | ssh controller sudo tee /etc/ceph/ceph.client.glance.keyring

root@controller's password:

[client.glance]

key = AQBL9adayn/LDRAA3wkYi0GTP2NbxHMUt9dHaQ==

ssh controller sudo chown glance:glance /etc/ceph/ceph.client.glance.keyring

Add the keyring for client.cinder:

ceph auth get-or-create client.cinder | ssh block_swift sudo tee /etc/ceph/ceph.client.cinder.keyring

root@block_swift's password:

[client.cinder]

key = AQA29adad8IPCRAAW5kqw1/H3EDoiOwOaMjMUA==

ssh block_swift sudo chown cinder:cinder /etc/ceph/ceph.client.cinder.keyring

Add the client.cinder keyring file to the compute node:

[root@radosgw1 ~]# ceph auth get-or-create client.cinder | ssh compute sudo tee /etc/ceph/ceph.client.cinder.keyring

root@compute's password:

[client.cinder]

key = AQA29adad8IPCRAAW5kqw1/H3EDoiOwOaMjMUA==

Create a temporary copy of the client.cinder key on the nova-compute node; the file is written to /root on the compute node:

ceph auth get-key client.cinder | ssh compute tee client.cinder.key

On every compute node (this example has only one, compute), do the following. With multiple compute nodes, use the same UUID and key on all of them:

[root@compute ~]# uuidgen

35d4dd62-4510-49d2-a9b3-0a7a06187802

Create the file secret.xml in /root:

[root@compute ~]# cat secret.xml

<secret ephemeral='no' private='no'>

<uuid>35d4dd62-4510-49d2-a9b3-0a7a06187802</uuid>

<usage type='ceph'>

<name>client.cinder secret</name>

</usage>

</secret>

[root@compute ~]# virsh secret-define --file secret.xml

Secret 35d4dd62-4510-49d2-a9b3-0a7a06187802 created

The value passed to --base64 below is the content of /root/client.cinder.key on the compute node, i.e. the temporary key file created earlier:

[root@compute ~]# virsh secret-set-value --secret 35d4dd62-4510-49d2-a9b3-0a7a06187802 --base64 AQA29adad8IPCRAAW5kqw1/H3EDoiOwOaMjMUA==

Secret value set

[root@compute ~]# rm -f client.cinder.key secret.xml

OpenStack configuration

On the controller node:

vim /etc/glance/glance-api.conf

[DEFAULT]

…

default_store = rbd

show_image_direct_url = True

show_multiple_locations = True

…

[glance_store]

stores = rbd

default_store = rbd

rbd_store_pool = images

rbd_store_user = glance

rbd_store_ceph_conf = /etc/ceph/ceph.conf

rbd_store_chunk_size = 8

On the storage node:

vim /etc/cinder/cinder.conf

[DEFAULT]

Keep the existing settings, but comment out the LVM back end and enable ceph instead:

#enabled_backends = lvm

enabled_backends = ceph

#glance_api_version = 2

…

[ceph]

volume_driver = cinder.volume.drivers.rbd.RBDDriver

rbd_pool = volumes

rbd_ceph_conf = /etc/ceph/ceph.conf

rbd_flatten_volume_from_snapshot = false

rbd_max_clone_depth = 5

rbd_store_chunk_size = 4

rados_connect_timeout = -1

glance_api_version = 2

rbd_user = cinder

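rbd_secret_uuid = 35d4dd62-4510-49d2-a9b3-0a7a06187802

Note: rbd_secret_uuid must be the UUID of the libvirt secret defined on the compute nodes (35d4dd62-4510-49d2-a9b3-0a7a06187802 in this example), and every compute node must use that same UUID. This example has only one compute node.

Note: if multiple cinder back ends are configured, glance_api_version = 2 must be set in [DEFAULT]. In this example it is commented out there because only the ceph back end is enabled.

On each compute node, edit /etc/nova/nova.conf: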

vim /etc/nova/nova.conf

[libvirt]

virt_type = qemu

hw_disk_discard = unmap

images_type = rbd

images_rbd_pool = vms

images_rbd_ceph_conf = /etc/ceph/ceph.conf

rbd_user = cinder

rbd_secret_uuid = 35d4dd62-4510-49d2-a9b3-0a7a06187802

disk_cachemodes="network=writeback"

inject_password = false

inject_key = false

inject_partition = -2

live_migration_flag=VIR_MIGRATE_UNDEFINE_SOURCE, VIR_MIGRATE_PEER2PEER, VIR_MIGRATE_LIVE, VIR_MIGRATE_TUNNELLED
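Because the libvirt secret UUID and rbd_secret_uuid must agree in both nova.conf and cinder.conf, a quick check can catch a stale value. This is a minimal sketch, assuming each file contains a single rbd_secret_uuid line (it is not section-aware). `check_secret_uuid` is a hypothetical helper; `virsh secret-list` shows the UUIDs defined on a compute node.

```shell
# Hypothetical helper: check_secret_uuid UUID FILE...
# Reports whether each config file's rbd_secret_uuid equals UUID.
check_secret_uuid() {
    want=$1
    shift
    rc=0
    for f in "$@"; do
        got=$(sed -n 's/^[[:space:]]*rbd_secret_uuid[[:space:]]*=[[:space:]]*//p' "$f" |
              sed 's/[[:space:]]*$//' | tail -n 1)
        if [ "$got" = "$want" ]; then
            echo "ok $f"
        else
            echo "MISMATCH $f: ${got:-<unset>}"
            rc=1
        fi
    done
    return $rc
}
```

For example, on the compute node: `check_secret_uuid 35d4dd62-4510-49d2-a9b3-0a7a06187802 /etc/nova/nova.conf`, and on the storage node the same with /etc/cinder/cinder.conf.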

Restart the OpenStack services

On the controller node:

systemctl restart openstack-glance-api.service

On the compute node:

systemctl restart openstack-nova-compute.service

On the storage node:

systemctl restart openstack-cinder-volume.service

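Verify

Create a volume in the OpenStack dashboard, then check the volumes pool on the Ceph cluster.

Upload an image, then check the images pool.

Launch a VM, then check the vms pool.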
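When checking the pools with `rbd ls <pool>`, it helps to know what name to look for. The sketch below assumes the default naming we understand each service to use: cinder stores volume-<volume id>, nova stores <instance id>_disk, and glance stores the image under its image id. `rbd_name` is a hypothetical helper.

```shell
# Hypothetical helper: rbd_name POOL ID -> expected RBD image name,
# assuming default cinder/nova/glance naming.
rbd_name() {
    case "$1" in
        volumes) echo "volume-$2" ;;
        vms)     echo "${2}_disk" ;;
        images)  echo "$2" ;;
        *)       echo "unknown pool: $1" >&2; return 1 ;;
    esac
}
```

Usage on a node with the client.admin keyring: `rbd ls volumes | grep -x "$(rbd_name volumes <volume id>)"`, taking the ID from `openstack volume show -f value -c id <name>`.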

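13. Integrating radosgw with the swift API

On the controller node

List the existing swift endpoints and delete them (the IDs below are from this environment; use the ones your listing shows):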

openstack endpoint list | grep swift

openstack endpoint delete 00bd2daf82a94855b768522e91451e0f

openstack endpoint delete 9377a725952d403c9f48c285f1319426

openstack endpoint delete b2e78493a57145fc95fa825823b883ba
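Instead of copying IDs by hand, the object-store endpoint IDs can be filtered out of the CLI output. This is a sketch assuming the output of `openstack endpoint list -f value -c ID -c "Service Type"` (ID first, then service type, one endpoint per line). `object_store_ids` is a hypothetical helper; review its output before deleting anything.

```shell
# Hypothetical helper: read "<id> <service type>" lines on stdin and print
# the IDs of object-store endpoints only.
object_store_ids() {
    awk '$2 == "object-store" { print $1 }'
}
```

Usage: `openstack endpoint list -f value -c ID -c "Service Type" | object_store_ids | xargs -r -n 1 openstack endpoint delete`.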

Create endpoints that point to the radosgw swift gateway:

openstack endpoint create --region RegionOne object-store public "http://192.168.1.31:7480/swift/v1"

openstack endpoint create --region RegionOne object-store admin "http://192.168.1.31:7480/swift/v1"

openstack endpoint create --region RegionOne object-store internal "http://192.168.1.31:7480/swift/v1"

Verify:

[root@controller ~]# openstack endpoint list |grep swift

| 9927ac58af304ab28b3a95bbae098eb5 | RegionOne | swift | object-store | True | public | http://192.168.1.31:7480/swift/v1 |

| 9bf06eaf08654bc18bf036792eb7f3e0 | RegionOne | swift | object-store | True | admin | http://192.168.1.31:7480/swift/v1 |

| dd033683069144b29136c05ecc5c8a82 | RegionOne | swift | object-store | True | internal | http://192.168.1.31:7480/swift/v1 |

The swift account contains no containers yet:

[root@controller ~]# swift list

[root@controller ~]# swift stat

Account: v1

Containers: 0

Objects: 0

Bytes: 0

X-Timestamp: 1521528791.35801

X-Account-Bytes-Used-Actual: 0

X-Trans-Id: tx000000000000000000019-005ab0afd6-8dd2cc-default

Content-Type: text/plain; charset=utf-8

Accept-Ranges: bytes

[root@controller ~]#

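On the gateway node radosgw1

On the radosgw gateway node (radosgw1, 192.168.1.31 in this example), edit the cluster configuration file ceph.conf and add the following under the gateway's section: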

[client.rgw.radosgw1.7480]

host = radosgw1

rgw_frontends = civetweb port=7480

rgw_content_length_compat = true

rgw_keystone_url = http://192.168.1.50:5000

rgw_keystone_admin_user = admin

rgw_keystone_admin_password = 123456

rgw_keystone_admin_tenant = admin

rgw_keystone_accepted_roles = admin

rgw_keystone_token_cache_size = 10

rgw_keystone_revocation_interval = 300

rgw_keystone_make_new_tenants = true

rgw_s3_auth_use_keystone = true

rgw_keystone_verify_ssl = false

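Here 192.168.1.50:5000 is the controller node's IP address and Keystone port. Restart the radosgw service (vcfs-radosgw is the unit name in this environment; on a stock Ceph installation the corresponding unit is typically ceph-radosgw@<instance>):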

[root@radosgw1 ~]# systemctl restart vcfs-radosgw@rgw.radosgw1.7480

Verify

Create a container

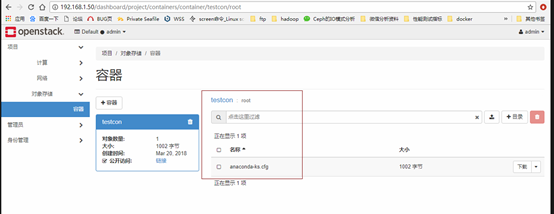

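From the dashboard on the controller node, create a container named testcon.

Check from the command line; the container count is now 1: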

[root@controller ~]# swift stat

Account: v1

Containers: 1

Objects: 0

Bytes: 0

X-Timestamp: 1521529665.74975

X-Account-Bytes-Used-Actual: 0

X-Trans-Id: tx00000000000000000002e-005ab0b341-8dd2cc-default

Content-Type: text/plain; charset=utf-8

Accept-Ranges: bytes

[root@controller ~]#

Check on the radosgw node:

[root@radosgw1 ~]# radosgw-admin bucket list

[

"my-new-bucket",

"testcon",

"vcluster"

]

The new container testcon appears in the bucket list.

Upload data

Upload a file from the controller node:

[root@controller ~]# swift upload testcon /root/anaconda-ks.cfg

root/anaconda-ks.cfg

Check the data on the cluster node:

[root@radosgw1 ~]# rados ls -p default.rgw.buckets.data

55dc7280-f601-4fc0-b7af-45cb2acb3c7a.9272435.1_root/anaconda-ks.cfg
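The RADOS object name above is the bucket marker, an underscore, and then the swift object name. To map listings back to swift object names, the marker can be stripped. This sketch assumes, as in the listing above, that the marker contains no underscore; it prints only head objects of simple uploads and skips names whose remainder starts with another underscore (such as tail or multipart pieces). `rgw_object_names` is a hypothetical helper.

```shell
# Hypothetical helper: read RADOS object names from
# "rados ls -p default.rgw.buckets.data" on stdin and print the swift object
# names of head objects ("<marker>_<name>"), skipping "<marker>__..." pieces.
rgw_object_names() {
    sed -n 's/^[^_]*_\([^_].*\)$/\1/p'
}
```

Usage: `rados ls -p default.rgw.buckets.data | rgw_object_names` prints root/anaconda-ks.cfg for the upload above.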

Refresh the dashboard: the uploaded file is now listed.

OpenStack is now successfully integrated with the Ceph radosgw swift interface.